
Agile Live

- 1: Overview

- 2: Introduction to Agile Live technology

- 3: Installation and configuration

- 3.1: Hardware examples

- 3.2: The System Controller

- 3.3: The base platform

- 3.3.1: NTP

- 3.3.2: Linux settings

- 3.3.3: Disabling unattended upgrades of CUDA toolkit

- 3.3.4: Installing older releases

- 3.4: Configuration and monitoring GUI

- 3.4.1: Custom MongoDB installation

- 3.5: Prometheus and Grafana for monitoring

- 3.6: Control panel GUI examples

- 4: Tutorials and How-To's

- 4.1: Getting Started

- 4.2: Using the configuration-and-monitoring GUI

- 4.3: Viewing the multiview and outputs

- 4.4: Creating a video mixer control panel in Companion

- 4.5: Using the audio control GUI

- 4.6: Setting up multi-view outputs using the REST API

- 4.7: Security in Agile Live

- 4.8: Statistics in Agile Live

- 4.9: Using Media Player metadata in HTML pages

- 5: Releases

- 5.1: Release 7.0.0

- 5.2: Release 6.0.0

- 5.3: Release 5.0.0

- 5.4: Release 4.0.0

- 5.5: Release 3.0.0

- 5.6: Release 2.0.0

- 5.7: Release 1.0.0

- 5.8: Upgrade instructions

- 6: Reference

- 6.1: Agile Live 7.0.0 developer reference

- 6.1.1: REST API v3

- 6.1.2: Agile Live Rendering Engine configuration documentation

- 6.1.3: Agile Live Rendering Engine command documentation

- 6.1.4: C++ SDK

- 6.1.4.1: Classes

- 6.1.4.1.1: AclLog::CommandLogFormatter

- 6.1.4.1.2: AclLog::FileLocationFormatterFlag

- 6.1.4.1.3: AclLog::ThreadNameFormatterFlag

- 6.1.4.1.4: AlignedAudioFrame

- 6.1.4.1.5: AlignedFrame

- 6.1.4.1.6: ControlDataCommon::ConnectionStatus

- 6.1.4.1.7: ControlDataCommon::Response

- 6.1.4.1.8: ControlDataCommon::StatusMessage

- 6.1.4.1.9: ControlDataSender

- 6.1.4.1.10: ControlDataSender::Settings

- 6.1.4.1.11: DeviceMemory

- 6.1.4.1.12: IControlDataReceiver

- 6.1.4.1.13: IControlDataReceiver::IncomingRequest

- 6.1.4.1.14: IControlDataReceiver::ReceiverResponse

- 6.1.4.1.15: IControlDataReceiver::Settings

- 6.1.4.1.16: IMediaStreamer

- 6.1.4.1.17: IMediaStreamer::Configuration

- 6.1.4.1.18: IMediaStreamer::Settings

- 6.1.4.1.19: IngestApplication

- 6.1.4.1.20: IngestApplication::Settings

- 6.1.4.1.21: ISystemControllerInterface

- 6.1.4.1.22: ISystemControllerInterface::Callbacks

- 6.1.4.1.23: ISystemControllerInterface::Response

- 6.1.4.1.24: MediaReceiver

- 6.1.4.1.25: MediaReceiver::NewStreamParameters

- 6.1.4.1.26: MediaReceiver::Settings

- 6.1.4.1.27: SystemControllerConnection

- 6.1.4.1.28: SystemControllerConnection::Settings

- 6.1.4.1.29: TimeCommon::TAIStatus

- 6.1.4.1.30: TimeCommon::TimeStructure

- 6.1.4.2: Files

- 6.1.4.2.1: include/AclLog.h

- 6.1.4.2.2: include/AlignedFrame.h

- 6.1.4.2.3: include/Base64.h

- 6.1.4.2.4: include/ControlDataCommon.h

- 6.1.4.2.5: include/ControlDataSender.h

- 6.1.4.2.6: include/DeviceMemory.h

- 6.1.4.2.7: include/IControlDataReceiver.h

- 6.1.4.2.8: include/IMediaStreamer.h

- 6.1.4.2.9: include/IngestApplication.h

- 6.1.4.2.10: include/IngestUtils.h

- 6.1.4.2.11: include/ISystemControllerInterface.h

- 6.1.4.2.12: include/MediaReceiver.h

- 6.1.4.2.13: include/PixelFormat.h

- 6.1.4.2.14: include/SystemControllerConnection.h

- 6.1.4.2.15: include/TimeCommon.h

- 6.1.4.2.16: include/UUIDUtils.h

- 6.1.4.3: Namespaces

- 6.1.4.3.1: ACL

- 6.1.4.3.2: AclLog

- 6.1.4.3.3: ControlDataCommon

- 6.1.4.3.4: IngestUtils

- 6.1.4.3.5: spdlog

- 6.1.4.3.6: TimeCommon

- 6.1.4.3.7: UUIDUtils

- 6.1.5: System controller config

- 6.2: Agile Live 6.0.0 developer reference

- 6.2.1: REST API v2

- 6.2.2: Agile Live Rendering Engine command documentation

- 6.2.3: C++ SDK

- 6.2.3.1: Classes

- 6.2.3.1.1: AclLog::CommandLogFormatter

- 6.2.3.1.2: AclLog::FileLocationFormatterFlag

- 6.2.3.1.3: AclLog::ThreadNameFormatterFlag

- 6.2.3.1.4: AlignedAudioFrame

- 6.2.3.1.5: AlignedFrame

- 6.2.3.1.6: ControlDataCommon::ConnectionStatus

- 6.2.3.1.7: ControlDataCommon::Response

- 6.2.3.1.8: ControlDataCommon::StatusMessage

- 6.2.3.1.9: ControlDataReceiver

- 6.2.3.1.10: ControlDataReceiver::IncomingRequest

- 6.2.3.1.11: ControlDataReceiver::ReceiverResponse

- 6.2.3.1.12: ControlDataReceiver::Settings

- 6.2.3.1.13: ControlDataSender

- 6.2.3.1.14: ControlDataSender::Settings

- 6.2.3.1.15: DeviceMemory

- 6.2.3.1.16: IngestApplication

- 6.2.3.1.17: IngestApplication::Settings

- 6.2.3.1.18: ISystemControllerInterface

- 6.2.3.1.19: ISystemControllerInterface::Callbacks

- 6.2.3.1.20: ISystemControllerInterface::Response

- 6.2.3.1.21: MediaReceiver

- 6.2.3.1.22: MediaReceiver::NewStreamParameters

- 6.2.3.1.23: MediaReceiver::Settings

- 6.2.3.1.24: MediaStreamer

- 6.2.3.1.25: MediaStreamer::Configuration

- 6.2.3.1.26: PipelineSystemControllerInterfaceFactory

- 6.2.3.1.27: SystemControllerConnection

- 6.2.3.1.28: SystemControllerConnection::Settings

- 6.2.3.1.29: TimeCommon::TAIStatus

- 6.2.3.1.30: TimeCommon::TimeStructure

- 6.2.3.2: Files

- 6.2.3.2.1: include/AclLog.h

- 6.2.3.2.2: include/AlignedFrame.h

- 6.2.3.2.3: include/Base64.h

- 6.2.3.2.4: include/ControlDataCommon.h

- 6.2.3.2.5: include/ControlDataReceiver.h

- 6.2.3.2.6: include/ControlDataSender.h

- 6.2.3.2.7: include/DeviceMemory.h

- 6.2.3.2.8: include/IngestApplication.h

- 6.2.3.2.9: include/IngestUtils.h

- 6.2.3.2.10: include/ISystemControllerInterface.h

- 6.2.3.2.11: include/MediaReceiver.h

- 6.2.3.2.12: include/MediaStreamer.h

- 6.2.3.2.13: include/PipelineSystemControllerInterfaceFactory.h

- 6.2.3.2.14: include/PixelFormat.h

- 6.2.3.2.15: include/SystemControllerConnection.h

- 6.2.3.2.16: include/TimeCommon.h

- 6.2.3.2.17: include/UUIDUtils.h

- 6.2.3.3: Namespaces

- 6.2.3.3.1: ACL

- 6.2.3.3.2: AclLog

- 6.2.3.3.3: ControlDataCommon

- 6.2.3.3.4: IngestUtils

- 6.2.3.3.5: spdlog

- 6.2.3.3.6: TimeCommon

- 6.2.3.3.7: UUIDUtils

- 6.2.4: System controller config

- 6.3: Agile Live 5.0.0 developer reference

- 6.3.1: REST API v2

- 6.3.2: Agile Live Rendering Engine command documentation

- 6.3.3: C++ SDK

- 6.3.3.1: Classes

- 6.3.3.1.1: AclLog::CommandLogFormatter

- 6.3.3.1.2: AclLog::FileLocationFormatterFlag

- 6.3.3.1.3: AclLog::ThreadNameFormatterFlag

- 6.3.3.1.4: AlignedAudioFrame

- 6.3.3.1.5: AlignedFrame

- 6.3.3.1.6: ControlDataCommon::ConnectionStatus

- 6.3.3.1.7: ControlDataCommon::Response

- 6.3.3.1.8: ControlDataCommon::StatusMessage

- 6.3.3.1.9: ControlDataReceiver

- 6.3.3.1.10: ControlDataReceiver::IncomingRequest

- 6.3.3.1.11: ControlDataReceiver::ReceiverResponse

- 6.3.3.1.12: ControlDataReceiver::Settings

- 6.3.3.1.13: ControlDataSender

- 6.3.3.1.14: ControlDataSender::Settings

- 6.3.3.1.15: DeviceMemory

- 6.3.3.1.16: IngestApplication

- 6.3.3.1.17: IngestApplication::Settings

- 6.3.3.1.18: ISystemControllerInterface

- 6.3.3.1.19: ISystemControllerInterface::Callbacks

- 6.3.3.1.20: ISystemControllerInterface::Response

- 6.3.3.1.21: MediaReceiver

- 6.3.3.1.22: MediaReceiver::NewStreamParameters

- 6.3.3.1.23: MediaReceiver::Settings

- 6.3.3.1.24: MediaStreamer

- 6.3.3.1.25: MediaStreamer::Configuration

- 6.3.3.1.26: PipelineSystemControllerInterfaceFactory

- 6.3.3.1.27: SystemControllerConnection

- 6.3.3.1.28: SystemControllerConnection::Settings

- 6.3.3.1.29: TimeCommon::TAIStatus

- 6.3.3.1.30: TimeCommon::TimeStructure

- 6.3.3.2: Files

- 6.3.3.2.1: include/AclLog.h

- 6.3.3.2.2: include/AlignedFrame.h

- 6.3.3.2.3: include/Base64.h

- 6.3.3.2.4: include/ControlDataCommon.h

- 6.3.3.2.5: include/ControlDataReceiver.h

- 6.3.3.2.6: include/ControlDataSender.h

- 6.3.3.2.7: include/DeviceMemory.h

- 6.3.3.2.8: include/IngestApplication.h

- 6.3.3.2.9: include/IngestUtils.h

- 6.3.3.2.10: include/ISystemControllerInterface.h

- 6.3.3.2.11: include/MediaReceiver.h

- 6.3.3.2.12: include/MediaStreamer.h

- 6.3.3.2.13: include/PipelineSystemControllerInterfaceFactory.h

- 6.3.3.2.14: include/SystemControllerConnection.h

- 6.3.3.2.15: include/TimeCommon.h

- 6.3.3.2.16: include/UUIDUtils.h

- 6.3.3.3: Namespaces

- 6.3.3.3.1: AclLog

- 6.3.3.3.2: ControlDataCommon

- 6.3.3.3.3: IngestUtils

- 6.3.3.3.4: spdlog

- 6.3.3.3.5: TimeCommon

- 6.3.3.3.6: UUIDUtils

- 6.3.4: System controller config

- 6.4: Agile Live 4.0.0 developer reference

- 6.4.1: REST API v2

- 6.4.2: Agile Live Rendering Engine command documentation

- 6.4.3: C++ SDK

- 6.4.3.1: Classes

- 6.4.3.1.1: AclLog::CommandLogFormatter

- 6.4.3.1.2: AlignedAudioFrame

- 6.4.3.1.3: AlignedFrame

- 6.4.3.1.4: ControlDataCommon::ConnectionStatus

- 6.4.3.1.5: ControlDataCommon::Response

- 6.4.3.1.6: ControlDataCommon::StatusMessage

- 6.4.3.1.7: ControlDataReceiver

- 6.4.3.1.8: ControlDataReceiver::IncomingRequest

- 6.4.3.1.9: ControlDataReceiver::ReceiverResponse

- 6.4.3.1.10: ControlDataReceiver::Settings

- 6.4.3.1.11: ControlDataSender

- 6.4.3.1.12: ControlDataSender::Settings

- 6.4.3.1.13: DeviceMemory

- 6.4.3.1.14: IngestApplication

- 6.4.3.1.15: IngestApplication::Settings

- 6.4.3.1.16: ISystemControllerInterface

- 6.4.3.1.17: ISystemControllerInterface::Callbacks

- 6.4.3.1.18: ISystemControllerInterface::Response

- 6.4.3.1.19: MediaReceiver

- 6.4.3.1.20: MediaReceiver::NewStreamParameters

- 6.4.3.1.21: MediaReceiver::Settings

- 6.4.3.1.22: MediaStreamer

- 6.4.3.1.23: MediaStreamer::Configuration

- 6.4.3.1.24: PipelineSystemControllerInterfaceFactory

- 6.4.3.1.25: SystemControllerConnection

- 6.4.3.1.26: SystemControllerConnection::Settings

- 6.4.3.1.27: TimeCommon::TAIStatus

- 6.4.3.1.28: TimeCommon::TimeStructure

- 6.4.3.2: Files

- 6.4.3.2.1: include/AclLog.h

- 6.4.3.2.2: include/AlignedFrame.h

- 6.4.3.2.3: include/Base64.h

- 6.4.3.2.4: include/ControlDataCommon.h

- 6.4.3.2.5: include/ControlDataReceiver.h

- 6.4.3.2.6: include/ControlDataSender.h

- 6.4.3.2.7: include/DeviceMemory.h

- 6.4.3.2.8: include/IngestApplication.h

- 6.4.3.2.9: include/IngestUtils.h

- 6.4.3.2.10: include/ISystemControllerInterface.h

- 6.4.3.2.11: include/MediaReceiver.h

- 6.4.3.2.12: include/MediaStreamer.h

- 6.4.3.2.13: include/PipelineSystemControllerInterfaceFactory.h

- 6.4.3.2.14: include/SystemControllerConnection.h

- 6.4.3.2.15: include/TimeCommon.h

- 6.4.3.2.16: include/UUIDUtils.h

- 6.4.3.3: Namespaces

- 6.4.3.3.1: AclLog

- 6.4.3.3.2: ControlDataCommon

- 6.4.3.3.3: IngestUtils

- 6.4.3.3.4: spdlog

- 6.4.3.3.5: TimeCommon

- 6.4.3.3.6: UUIDUtils

- 6.4.4: System controller config

- 6.5: Agile Live 3.0.0 developer reference

- 6.5.1: REST API v2

- 6.5.2: Agile Live Rendering Engine command documentation

- 6.5.3: C++ SDK

- 6.5.3.1: Classes

- 6.5.3.1.1: AlignedFrame

- 6.5.3.1.2: ControlDataCommon::ConnectionStatus

- 6.5.3.1.3: ControlDataCommon::Response

- 6.5.3.1.4: ControlDataCommon::StatusMessage

- 6.5.3.1.5: ControlDataReceiver

- 6.5.3.1.6: ControlDataReceiver::IncomingRequest

- 6.5.3.1.7: ControlDataReceiver::ReceiverResponse

- 6.5.3.1.8: ControlDataReceiver::Settings

- 6.5.3.1.9: ControlDataSender

- 6.5.3.1.10: ControlDataSender::Settings

- 6.5.3.1.11: DeviceMemory

- 6.5.3.1.12: IngestApplication

- 6.5.3.1.13: IngestApplication::Settings

- 6.5.3.1.14: ISystemControllerInterface

- 6.5.3.1.15: ISystemControllerInterface::Callbacks

- 6.5.3.1.16: ISystemControllerInterface::Response

- 6.5.3.1.17: MediaReceiver

- 6.5.3.1.18: MediaReceiver::NewStreamParameters

- 6.5.3.1.19: MediaReceiver::Settings

- 6.5.3.1.20: MediaStreamer

- 6.5.3.1.21: MediaStreamer::Configuration

- 6.5.3.1.22: PipelineSystemControllerInterfaceFactory

- 6.5.3.1.23: SystemControllerConnection

- 6.5.3.1.24: SystemControllerConnection::Settings

- 6.5.3.1.25: TimeCommon::TAIStatus

- 6.5.3.1.26: TimeCommon::TimeStructure

- 6.5.3.2: Files

- 6.5.3.2.1: include/AclLog.h

- 6.5.3.2.2: include/AlignedFrame.h

- 6.5.3.2.3: include/Base64.h

- 6.5.3.2.4: include/ControlDataCommon.h

- 6.5.3.2.5: include/ControlDataReceiver.h

- 6.5.3.2.6: include/ControlDataSender.h

- 6.5.3.2.7: include/DeviceMemory.h

- 6.5.3.2.8: include/IngestApplication.h

- 6.5.3.2.9: include/IngestUtils.h

- 6.5.3.2.10: include/ISystemControllerInterface.h

- 6.5.3.2.11: include/MediaReceiver.h

- 6.5.3.2.12: include/MediaStreamer.h

- 6.5.3.2.13: include/PipelineSystemControllerInterfaceFactory.h

- 6.5.3.2.14: include/SystemControllerConnection.h

- 6.5.3.2.15: include/TimeCommon.h

- 6.5.3.2.16: include/UUIDUtils.h

- 6.5.3.3: Namespaces

- 6.5.3.3.1: AclLog

- 6.5.3.3.2: ControlDataCommon

- 6.5.3.3.3: IngestUtils

- 6.5.3.3.4: TimeCommon

- 6.5.3.3.5: UUIDUtils

- 6.5.4: System controller config

- 6.6: Agile Live 2.0.0 developer reference

- 6.6.1: REST API v1

- 6.6.2: Agile Live Rendering Engine command documentation

- 6.6.3: C++ SDK

- 6.6.3.1: Classes

- 6.6.3.1.1: AlignedFrame

- 6.6.3.1.2: ControlDataCommon::ConnectionStatus

- 6.6.3.1.3: ControlDataCommon::Response

- 6.6.3.1.4: ControlDataCommon::StatusMessage

- 6.6.3.1.5: ControlDataReceiver

- 6.6.3.1.6: ControlDataReceiver::IncomingRequest

- 6.6.3.1.7: ControlDataReceiver::ReceiverResponse

- 6.6.3.1.8: ControlDataReceiver::Settings

- 6.6.3.1.9: ControlDataSender

- 6.6.3.1.10: ControlDataSender::Settings

- 6.6.3.1.11: DeviceMemory

- 6.6.3.1.12: IngestApplication

- 6.6.3.1.13: IngestApplication::Settings

- 6.6.3.1.14: ISystemControllerInterface

- 6.6.3.1.15: ISystemControllerInterface::Callbacks

- 6.6.3.1.16: ISystemControllerInterface::Response

- 6.6.3.1.17: MediaReceiver

- 6.6.3.1.18: MediaReceiver::NewStreamParameters

- 6.6.3.1.19: MediaReceiver::Settings

- 6.6.3.1.20: MediaStreamer

- 6.6.3.1.21: MediaStreamer::Configuration

- 6.6.3.1.22: PipelineSystemControllerInterfaceFactory

- 6.6.3.1.23: SystemControllerConnection

- 6.6.3.1.24: SystemControllerConnection::Settings

- 6.6.3.1.25: TimeCommon::TAIStatus

- 6.6.3.1.26: TimeCommon::TimeStructure

- 6.6.3.2: Files

- 6.6.3.2.1: include/AclLog.h

- 6.6.3.2.2: include/AlignedFrame.h

- 6.6.3.2.3: include/Base64.h

- 6.6.3.2.4: include/ControlDataCommon.h

- 6.6.3.2.5: include/ControlDataReceiver.h

- 6.6.3.2.6: include/ControlDataSender.h

- 6.6.3.2.7: include/DeviceMemory.h

- 6.6.3.2.8: include/IngestApplication.h

- 6.6.3.2.9: include/IngestUtils.h

- 6.6.3.2.10: include/ISystemControllerInterface.h

- 6.6.3.2.11: include/MediaReceiver.h

- 6.6.3.2.12: include/MediaStreamer.h

- 6.6.3.2.13: include/PipelineSystemControllerInterfaceFactory.h

- 6.6.3.2.14: include/SystemControllerConnection.h

- 6.6.3.2.15: include/TimeCommon.h

- 6.6.3.2.16: include/UUIDUtils.h

- 6.6.3.3: Namespaces

- 6.6.3.3.1: AclLog

- 6.6.3.3.2: ControlDataCommon

- 6.6.3.3.3: IngestUtils

- 6.6.3.3.4: TimeCommon

- 6.6.3.3.5: UUIDUtils

- 6.6.4: System controller config

- 6.7: Agile Live 1.0.0 developer reference

- 6.7.1: REST API v1

- 6.7.2: C++ SDK

- 6.7.2.1: Classes

- 6.7.2.1.1: AlignedData::DataFrame

- 6.7.2.1.2: ControlDataCommon::ConnectionStatus

- 6.7.2.1.3: ControlDataCommon::Response

- 6.7.2.1.4: ControlDataCommon::StatusMessage

- 6.7.2.1.5: ControlDataReceiver

- 6.7.2.1.6: ControlDataReceiver::IncomingRequest

- 6.7.2.1.7: ControlDataReceiver::ReceiverResponse

- 6.7.2.1.8: ControlDataReceiver::Settings

- 6.7.2.1.9: ControlDataSender

- 6.7.2.1.10: ControlDataSender::Settings

- 6.7.2.1.11: IngestApplication

- 6.7.2.1.12: IngestApplication::Settings

- 6.7.2.1.13: ISystemControllerInterface

- 6.7.2.1.14: ISystemControllerInterface::Callbacks

- 6.7.2.1.15: ISystemControllerInterface::Response

- 6.7.2.1.16: MediaReceiver

- 6.7.2.1.17: MediaReceiver::NewStreamParameters

- 6.7.2.1.18: MediaReceiver::Settings

- 6.7.2.1.19: MediaStreamer

- 6.7.2.1.20: MediaStreamer::Configuration

- 6.7.2.1.21: SystemControllerConnection

- 6.7.2.1.22: SystemControllerConnection::Settings

- 6.7.2.1.23: TimeCommon::TAIStatus

- 6.7.2.1.24: TimeCommon::TimeStructure

- 6.7.2.2: Files

- 6.7.2.2.1: include/AclLog.h

- 6.7.2.2.2: include/AlignedData.h

- 6.7.2.2.3: include/Base64.h

- 6.7.2.2.4: include/ControlDataCommon.h

- 6.7.2.2.5: include/ControlDataReceiver.h

- 6.7.2.2.6: include/ControlDataSender.h

- 6.7.2.2.7: include/IngestApplication.h

- 6.7.2.2.8: include/IngestUtils.h

- 6.7.2.2.9: include/ISystemControllerInterface.h

- 6.7.2.2.10: include/MediaReceiver.h

- 6.7.2.2.11: include/MediaStreamer.h

- 6.7.2.2.12: include/SystemControllerConnection.h

- 6.7.2.2.13: include/TimeCommon.h

- 6.7.2.2.14: include/UUIDUtils.h

- 6.7.2.3: Namespaces

- 6.7.2.3.1: AclLog

- 6.7.2.3.2: AlignedData

- 6.7.2.3.3: ControlDataCommon

- 6.7.2.3.4: IngestUtils

- 6.7.2.3.5: TimeCommon

- 6.7.2.3.6: UUIDUtils

- 6.7.3: System controller config

- 7: Troubleshooting

- 8: Credits

- 8.1: C++ SDK Credits

- 8.2: REST API Credits

1 - Overview

Agile Live is a software-based platform for production of media using standard, off-the-shelf hardware and datacenter building blocks.

The Agile Live system consists of multiple software components, distributed geographically between recording studios, remote production control rooms and datacenters.

All Agile Live components have the same understanding of the time ‘now’. The time reference used by all software components is TAI (Temps Atomique International), which provides a monotonic, steady time reference regardless of geographic location.

This makes it possible to compensate for differences in relative delay between the media streams. The synchronized timing makes the system unique compared to other solutions usually bound to buffer levels and various queueing mechanisms.

The synchronization of the streams transported over networks with various delays makes it possible to build a distributed production environment, where the mixing of the video and audio sources can take place at a different location compared to the studio where they were captured.
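To make the idea concrete, here is a minimal Python sketch of delay compensation based on a shared clock. It is not Agile Live code: the `Frame` type, the function names and the 200 ms alignment budget are assumptions chosen for illustration. Each frame carries the timestamp it was captured at, and the receiver hands it on at capture time plus a fixed budget, so sources with different network delays line up again.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Frame:
    source: str
    capture_time_us: int  # shared-clock timestamp set at capture, in microseconds
    arrival_time_us: int  # shared-clock time at which the frame reached the mixer


def presentation_time_us(frame: Frame, alignment_us: int) -> int:
    """Time at which a frame is handed to the mixer: capture time plus a fixed budget."""
    return frame.capture_time_us + alignment_us


def is_on_time(frame: Frame, alignment_us: int) -> bool:
    """A frame is usable only if it arrived no later than its presentation time."""
    return frame.arrival_time_us <= presentation_time_us(frame, alignment_us)


# Two cameras capture the same instant, but their networks add different delays.
frames = [
    Frame("studio-cam", capture_time_us=1_000_000, arrival_time_us=1_020_000),
    Frame("remote-cam", capture_time_us=1_000_000, arrival_time_us=1_180_000),
]
ALIGNMENT_US = 200_000  # hypothetical 200 ms budget, not a product default

release_times = {f.source: presentation_time_us(f, ALIGNMENT_US) for f in frames}
all_on_time = all(is_on_time(f, ALIGNMENT_US) for f in frames)
```

Both frames get the same release time even though one arrived 160 ms after the other. The budget only has to exceed the slowest path's delay.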

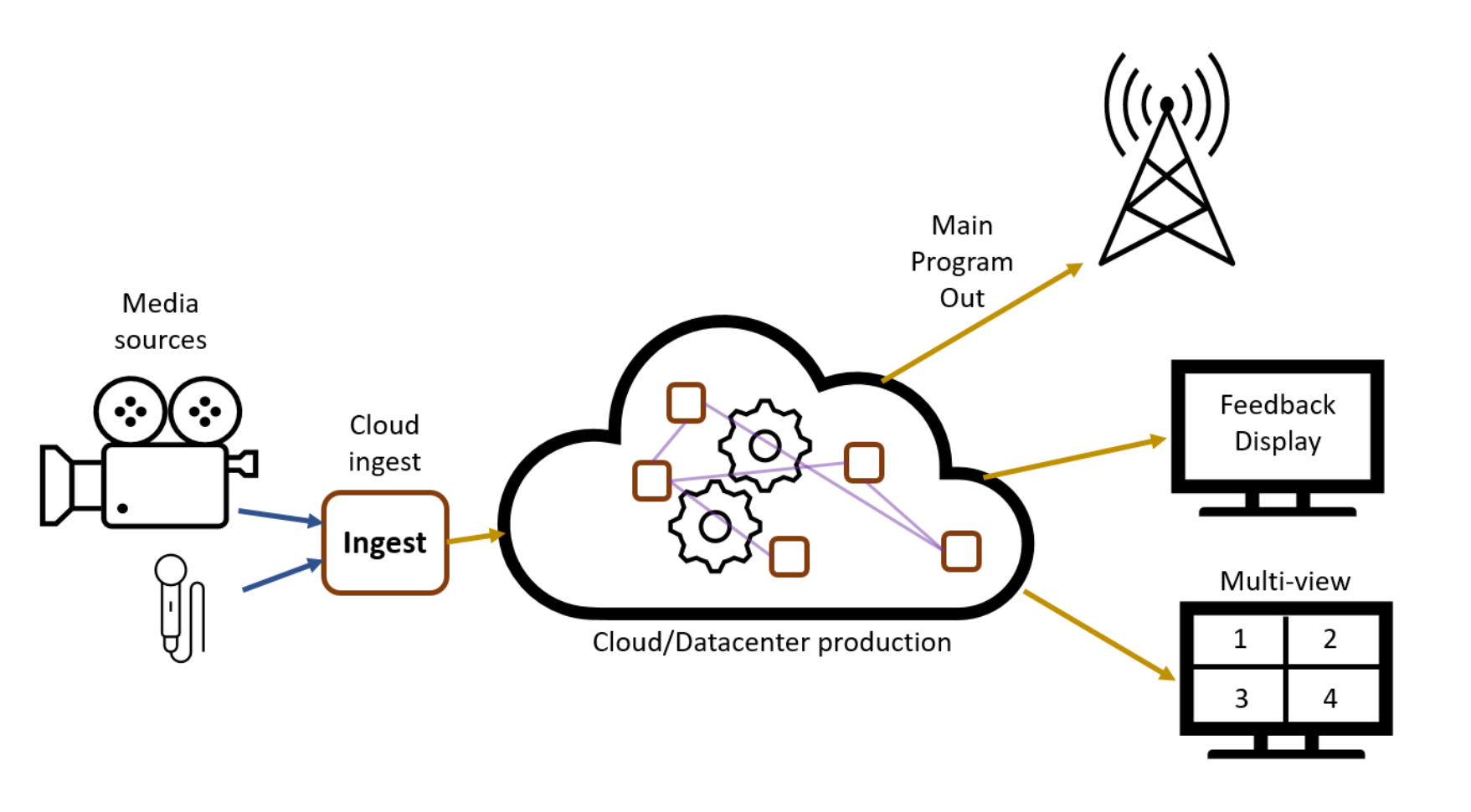

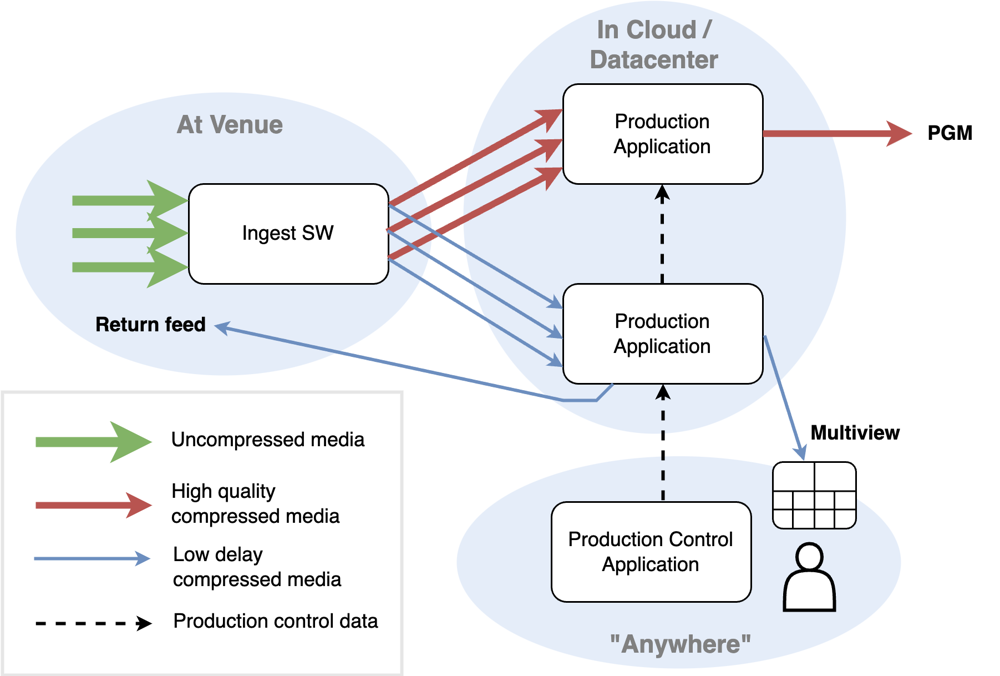

System overview

Agile Live components

Media sources, such as video cameras and microphones, are connected to a computer called Ingest. In practice, this will usually be some kind of off-the-shelf desktop computer, potentially equipped with a frame grabber card to allow physical input interfaces such as SDI cables, but it could also be a smartphone with a dedicated app using the built-in camera.

The Ingest is responsible for receiving the media data from the source, encoding it and transporting it over a network to the production environment inside the cloud/datacenter.

From the datacenter the remote production team can get multi-view streams of the available media sources to base their production decisions on, as well as a low-delay feedback display showing the result of the production.

The production in the datacenter also produces a high-quality program output, which is broadcast to the viewers. Both the multi-views and the feedback display will usually have a lower quality than this program output. This keeps the end-to-end delay between what happens in front of the cameras in the studio and the output stream on the feedback display as short as possible.

This in turn allows for feedback loops back to the studio, for instance for video cameras that are remotely controlled by the production team based on what they see on the multi-views, or having additional feedback displays in the studio.

2 - Introduction to Agile Live technology

Agile Live is a software-based platform and solution for live media production that enables remote and distributed workflows in software running on standard, off-the-shelf hardware. It can be deployed on-premises or in private or public cloud.

The system is built with an ‘API first’ philosophy throughout. It is built in several stacked conceptual layers, and a customer may choose how many layers to include in their solution, depending on which customisations they wish to do themselves. It is implemented in a distributed manner with a number of different software binaries the customer is responsible for running on their own hardware and operating system. If using all layers, the full solution provides media capture, synchronised contribution, video & audio mixing including Digital Video Effects and HTML-based graphics overlay, various production control panel integrations and a fully customisable multiview, primary distribution and a management & orchestration API layer with a configuration & monitoring GUI on top of it.

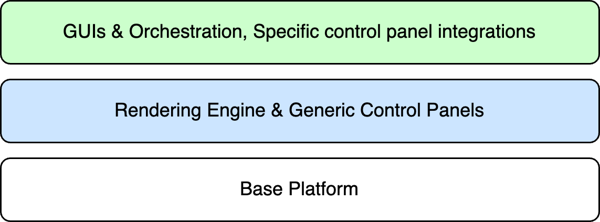

The layers of the platform are the “Base Layer”, the “Rendering Engine & Generic Control Panels” layer and the “GUIs & Orchestration, Specific Control Panels” layer.

Agile Live Layers

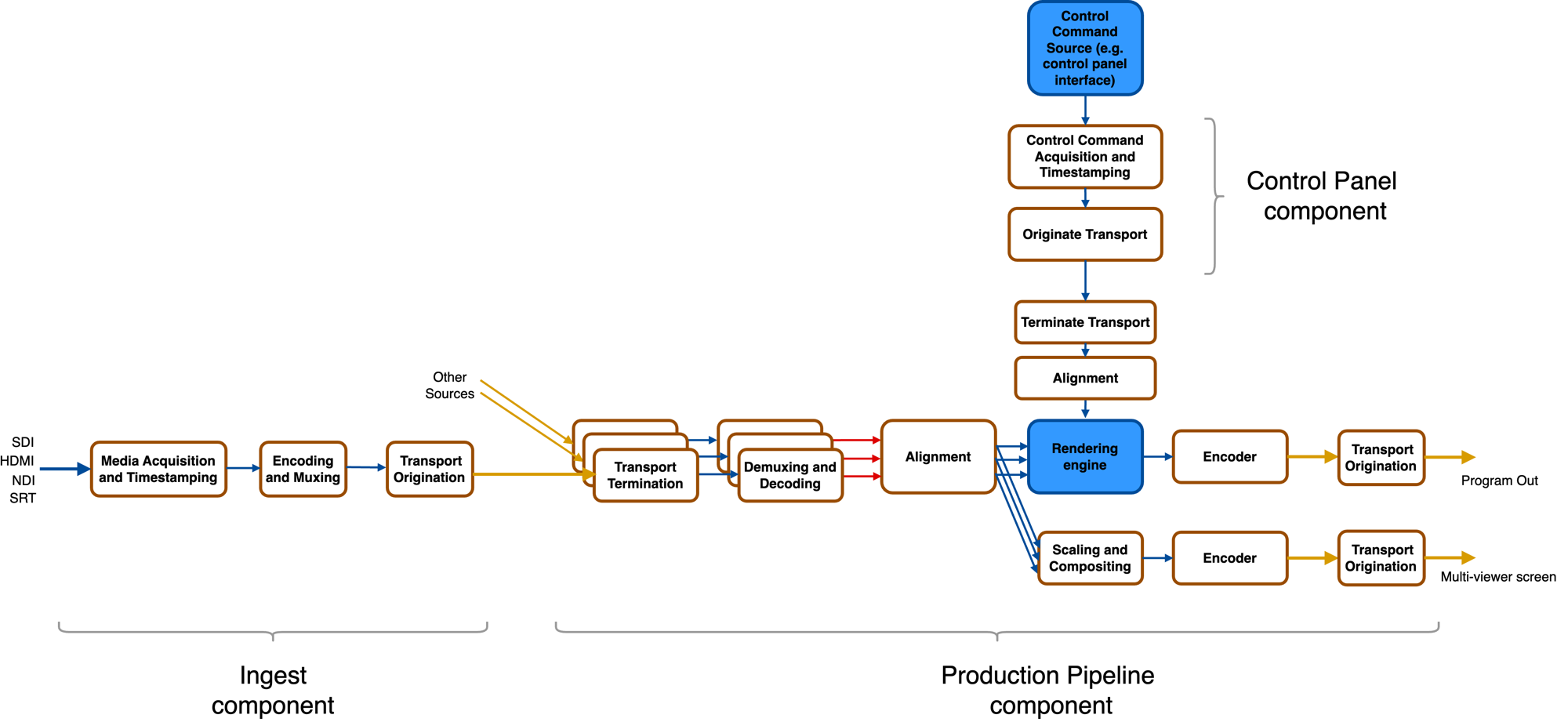

The base layer

In very simplified terms, the base layer of the platform is responsible for transporting media of diverse types from many different locations into a central location in perfect sync, presenting it there to higher-layer software that processes it in some way, and distributing the end result. This higher-layer software, which is not a part of the base layer, is referred to as a “rendering engine”. The base layer also carries control commands from remote control panels into the rendering engine, without having any knowledge of the actual control command syntax.

The following image shows a schematic of the roles played by the base layer. The parts in blue are higher layer functionality.

Base Layer

The base layer is separated into three components based on their different roles in a distributed computing system:

- The Ingest component handles acquisition of the media sources and timestamping of media frames, followed by encoding, muxing and origination of reliable transport over unreliable networks using SRT or RIST.

- The Production Pipeline component handles terminating all incoming media streams from ingests, demuxing and decoding, aligning all the sources according to timestamps and feeding them synchronously into the rendering engine. It also receives the resulting media frames from the rendering engine and encodes, muxes and streams out the media streams using MPEG-TS over UDP or SRT. It constructs a multiview from the aligned sources by fully customizable scaling and compositing. Finally, it receives control commands from the control panel component, aligns them in time with the media sources and feeds them into the rendering engine. NOTE that the rendering engine is NOT a part of the base layer, and must be supplied by a higher layer or by the implementer themselves.

- The control panel component is responsible for acquiring control command data (whose internal syntax it has no knowledge of), timestamping it, and sending it to the production pipeline component.
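The alignment step performed by the Production Pipeline can be sketched roughly as follows. This is an illustrative Python model, not the SDK: the function name `align_frames`, the tuple layout and the timestamps are all assumptions. Frames from several sources arrive interleaved and out of order, and only timestamps for which every expected source has delivered a frame are passed on as a synchronized set.

```python
from collections import defaultdict


def align_frames(frames, sources):
    """Group (source, timestamp, payload) tuples into complete, time-ordered frame sets.

    Only timestamps for which every expected source delivered a frame are emitted.
    """
    by_time = defaultdict(dict)
    for source, timestamp, payload in frames:
        by_time[timestamp][source] = payload
    expected = set(sources)
    return [
        (timestamp, by_time[timestamp])
        for timestamp in sorted(by_time)
        if set(by_time[timestamp]) == expected
    ]


# Frames arrive interleaved and out of order because each network path differs.
arrivals = [
    ("cam2", 40, "cam2@40"),
    ("cam1", 20, "cam1@20"),
    ("cam1", 40, "cam1@40"),
    ("cam2", 20, "cam2@20"),
    ("cam1", 60, "cam1@60"),  # cam2's frame for t=60 has not arrived yet
]
aligned = align_frames(arrivals, sources=["cam1", "cam2"])
```

In this sketch the set for timestamp 60 is held back because it is incomplete. A real pipeline also has to decide what to do when a frame never arrives, which is left out here.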

The base layer is delivered as libraries and header files, to be used to build the binaries that make up the platform. For convenience, the ingest component is also delivered as a precompiled binary named acl-ingest, since it currently needs very little customization.

The functionality of the base layer is managed via a REST API. A separate software program called the System Controller acts as a REST service and communicates with the rest of the base layer software to control and monitor it. The REST API is OpenAPI 2.0 compliant, and is documented here. Apart from the three components (ingest, production pipeline, controlpanel), two further concepts are central to the REST API: a Stream (transporting one source from one ingest to one production pipeline using one specific set of properties) and a controlconnection (transporting control commands from one control panel to one production pipeline, or from one production pipeline to another).

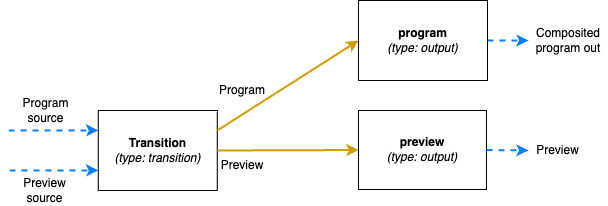

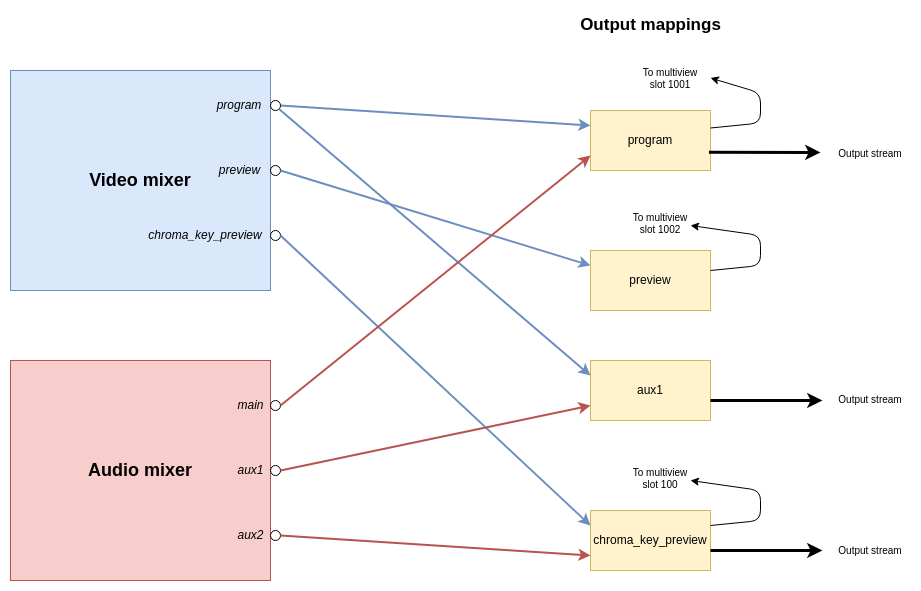

The rendering engine and generic control panels layer

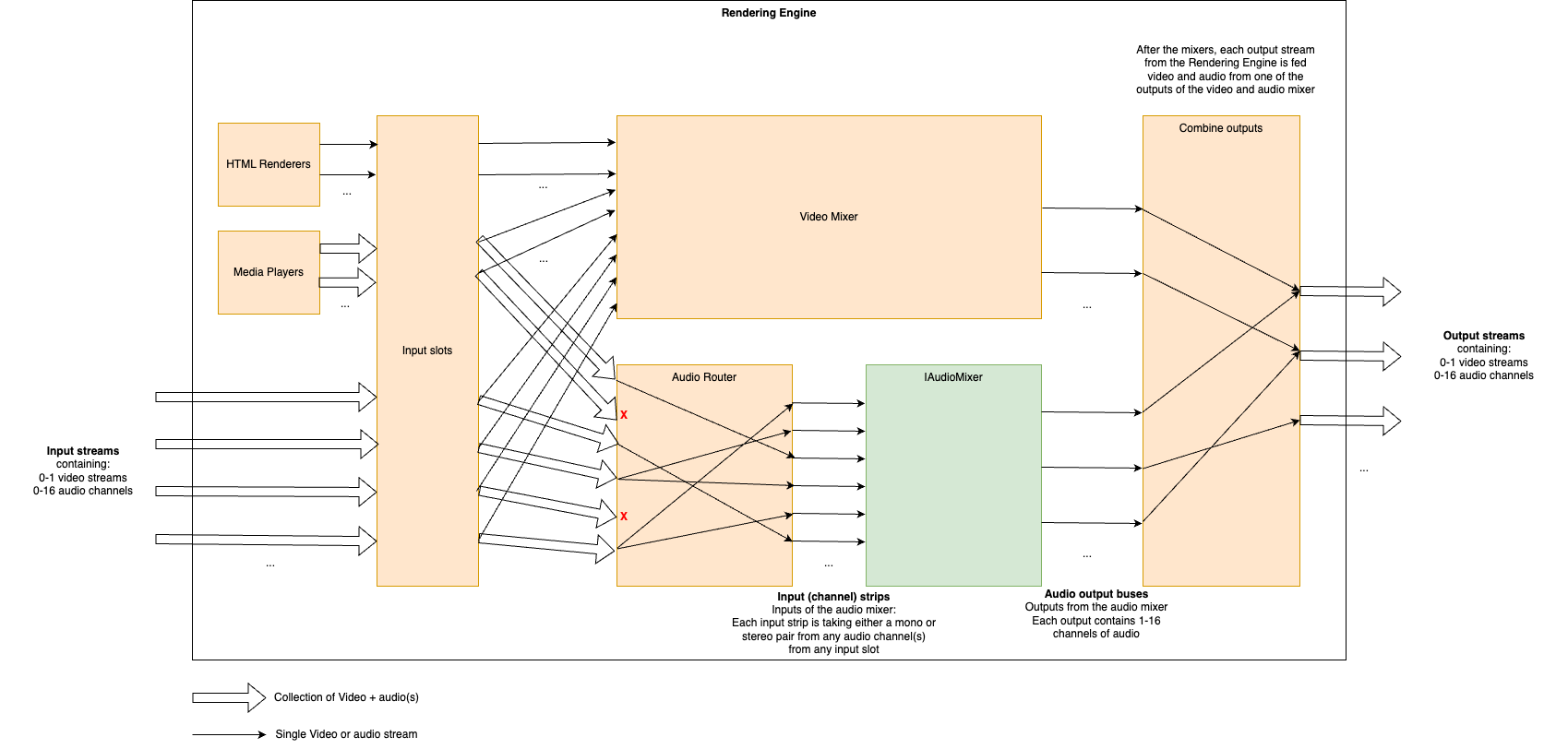

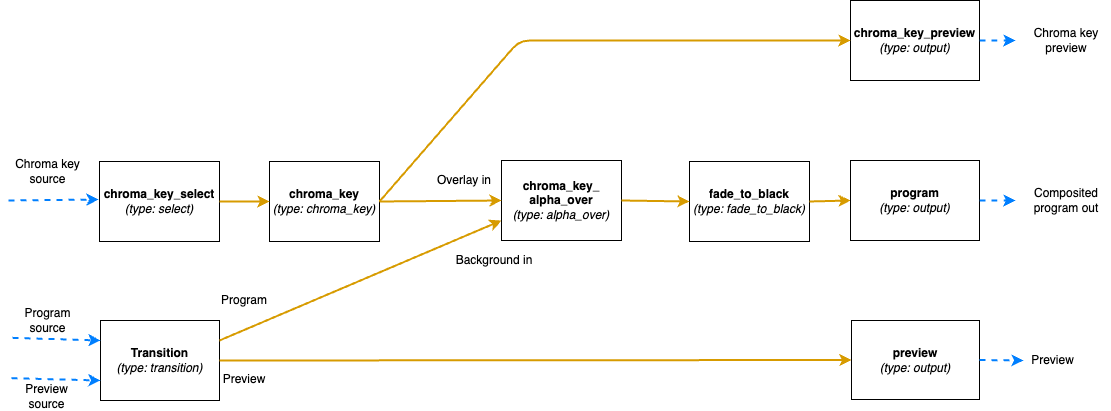

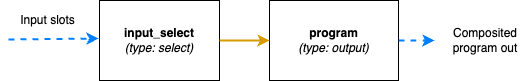

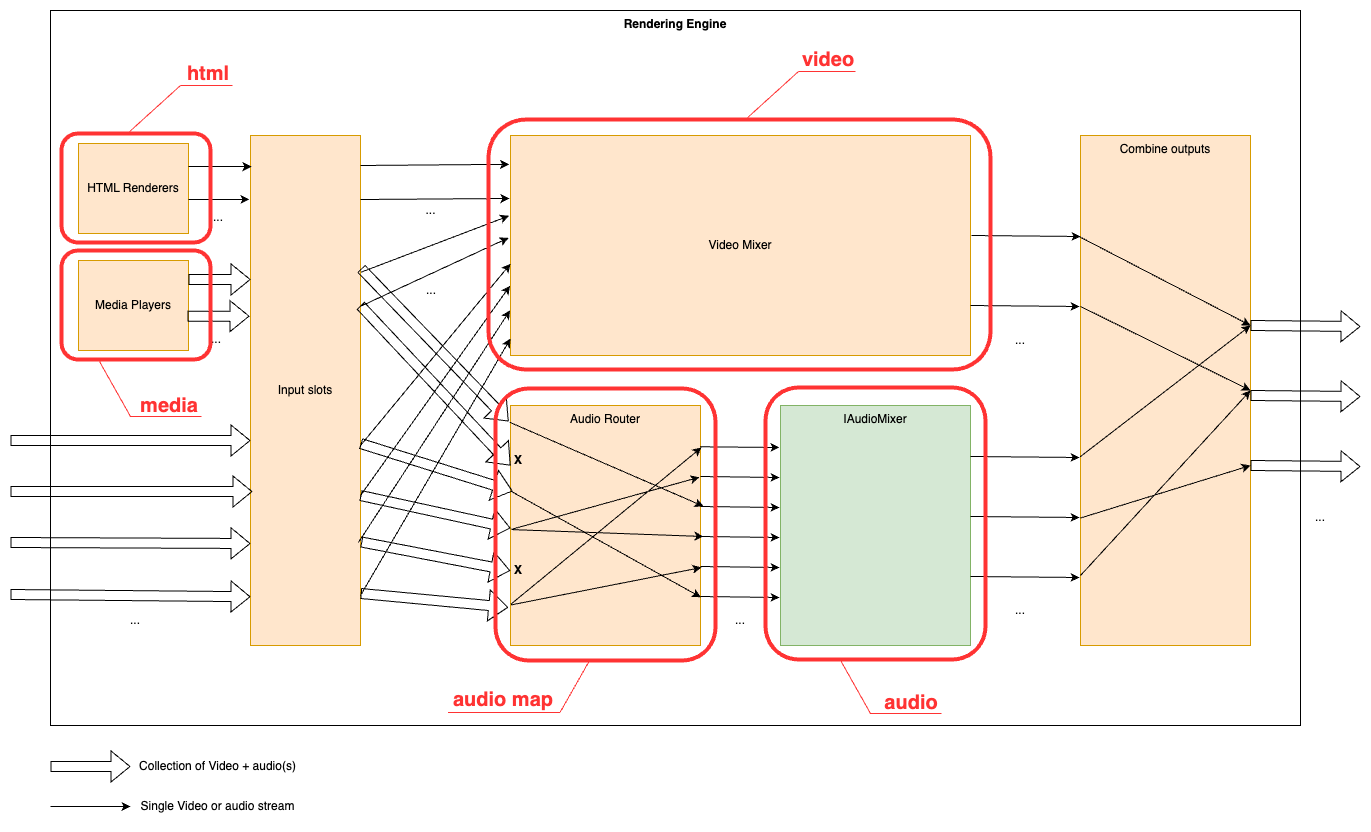

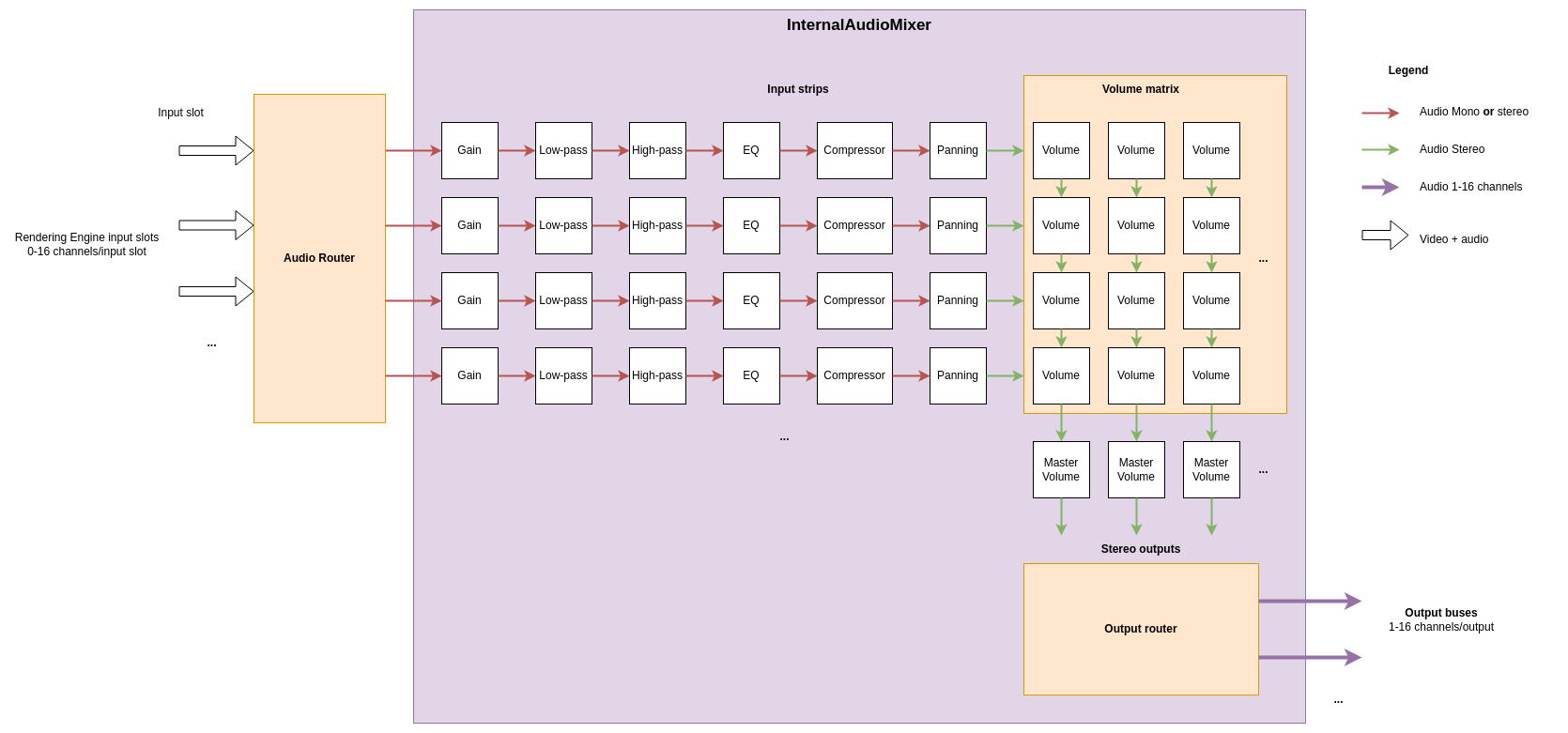

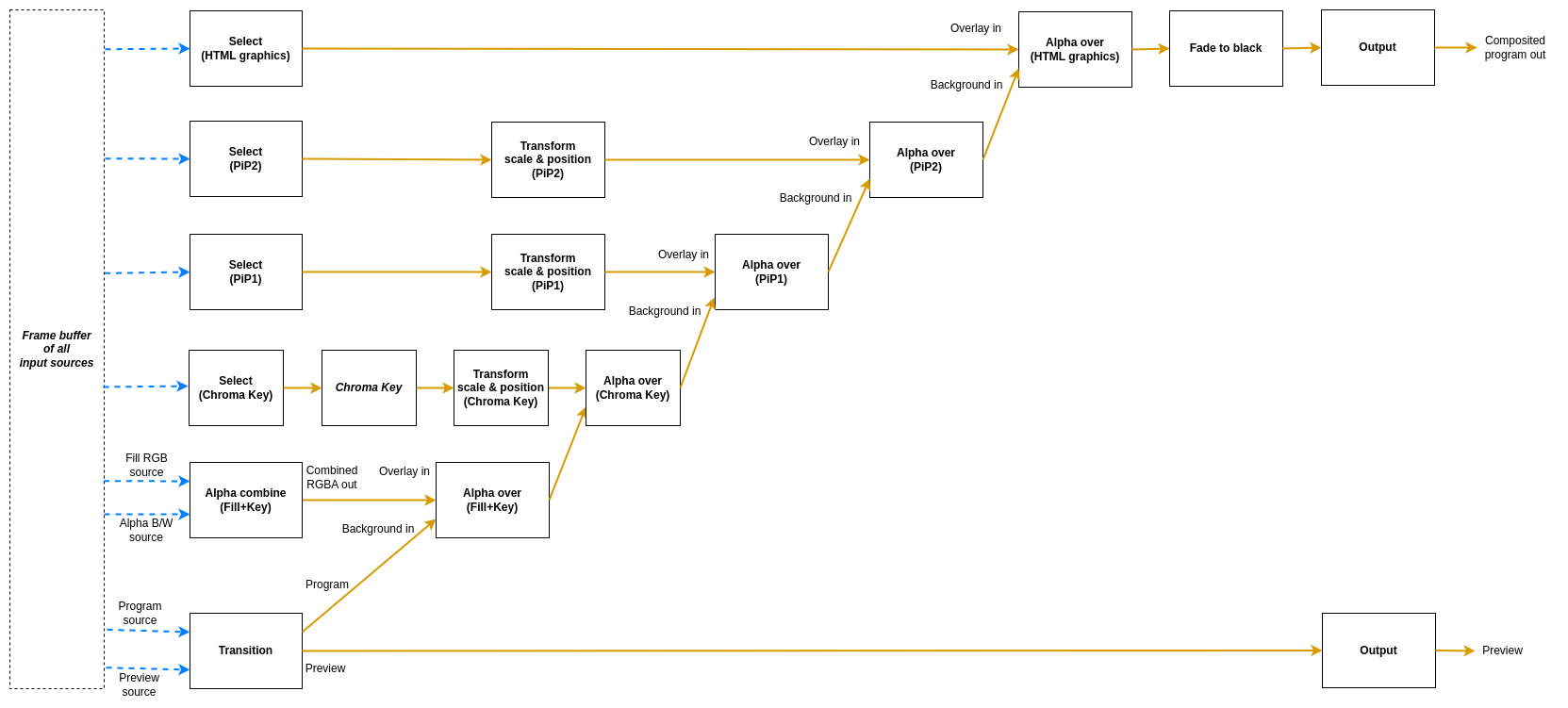

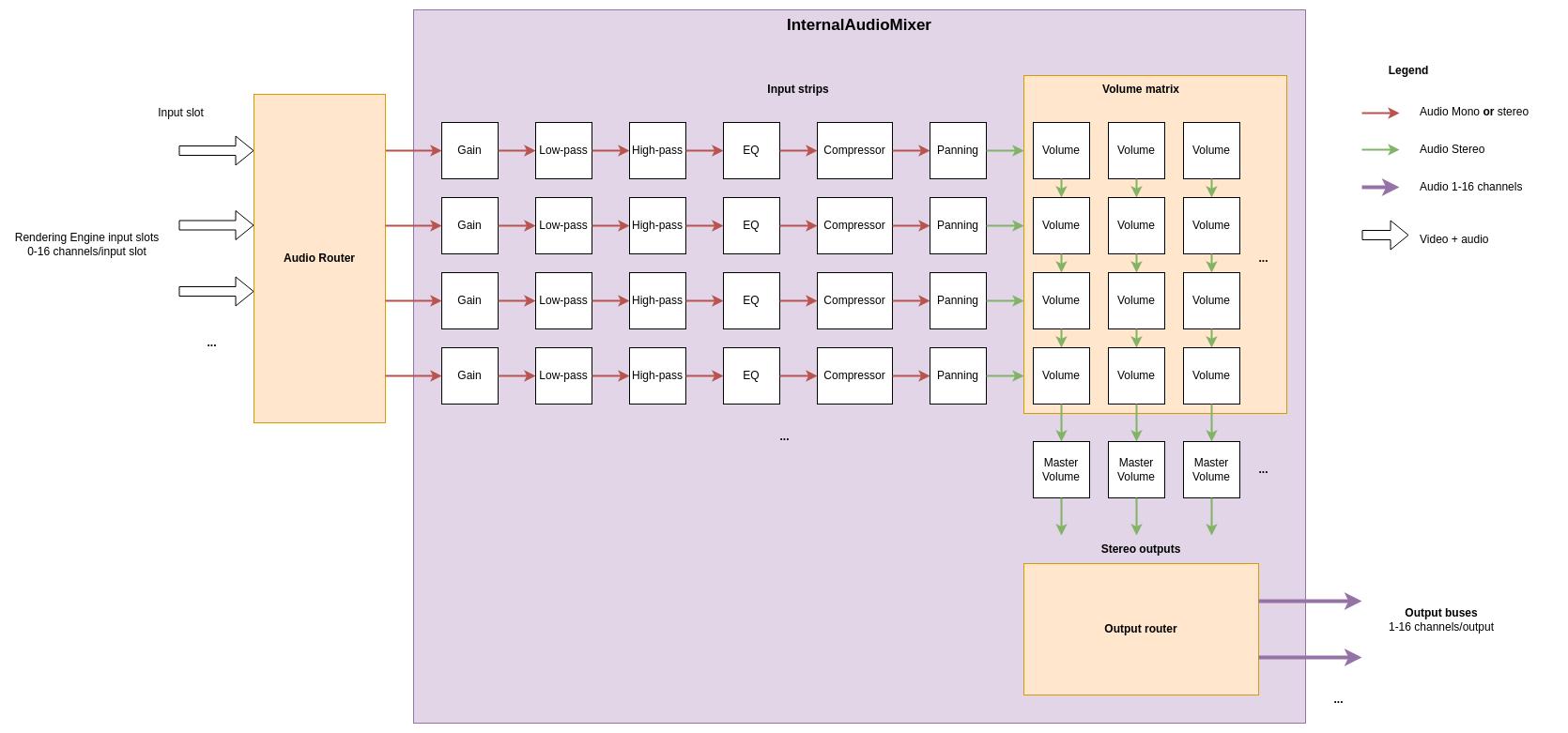

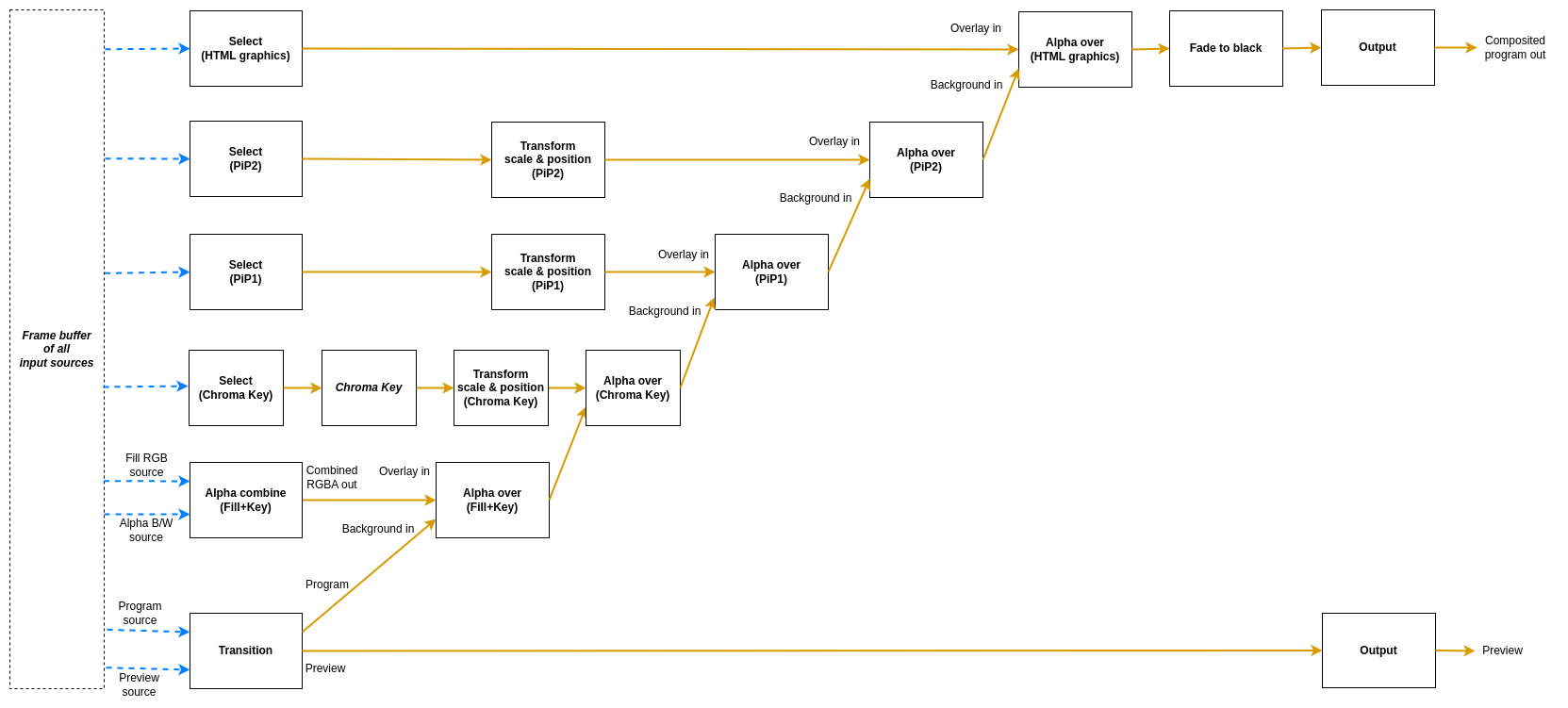

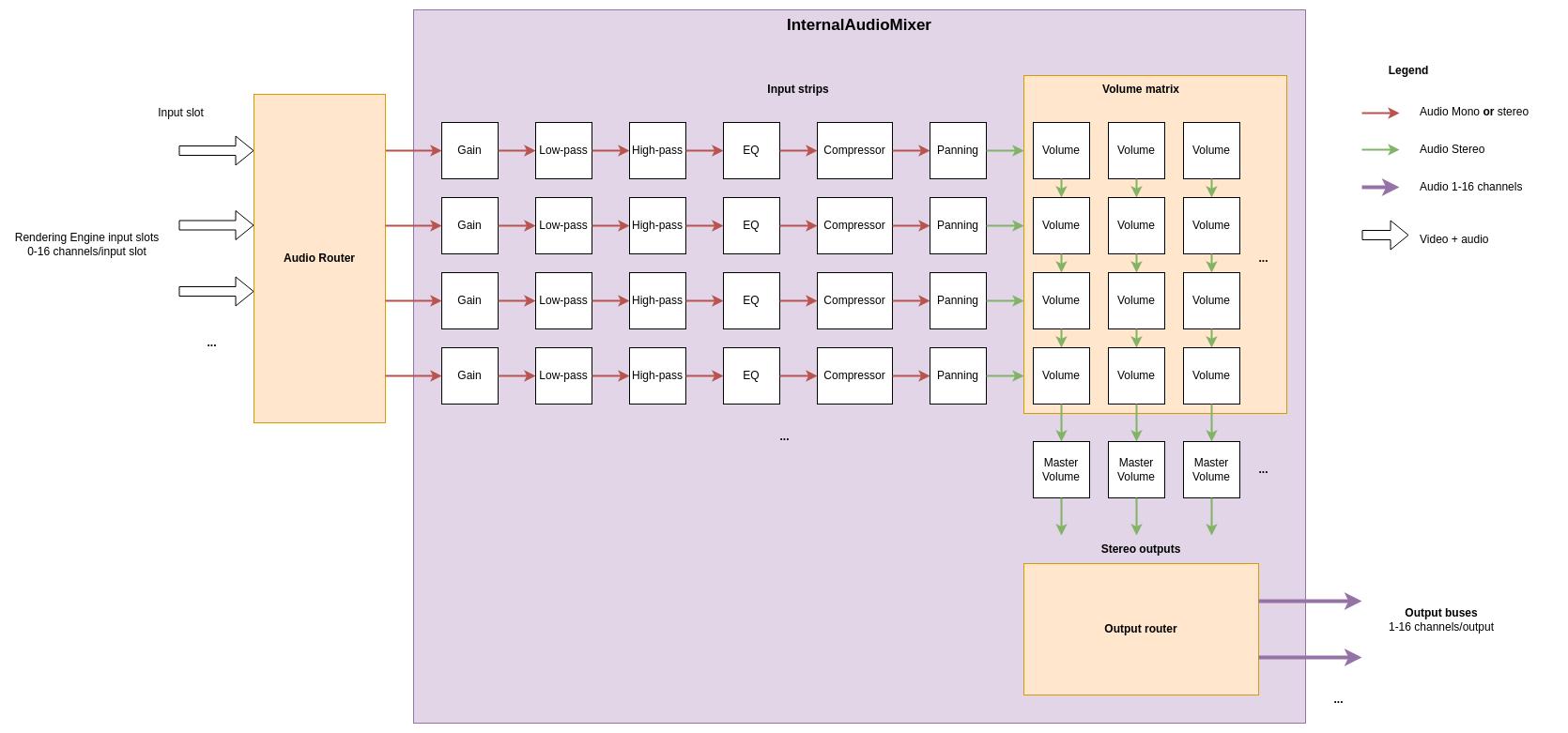

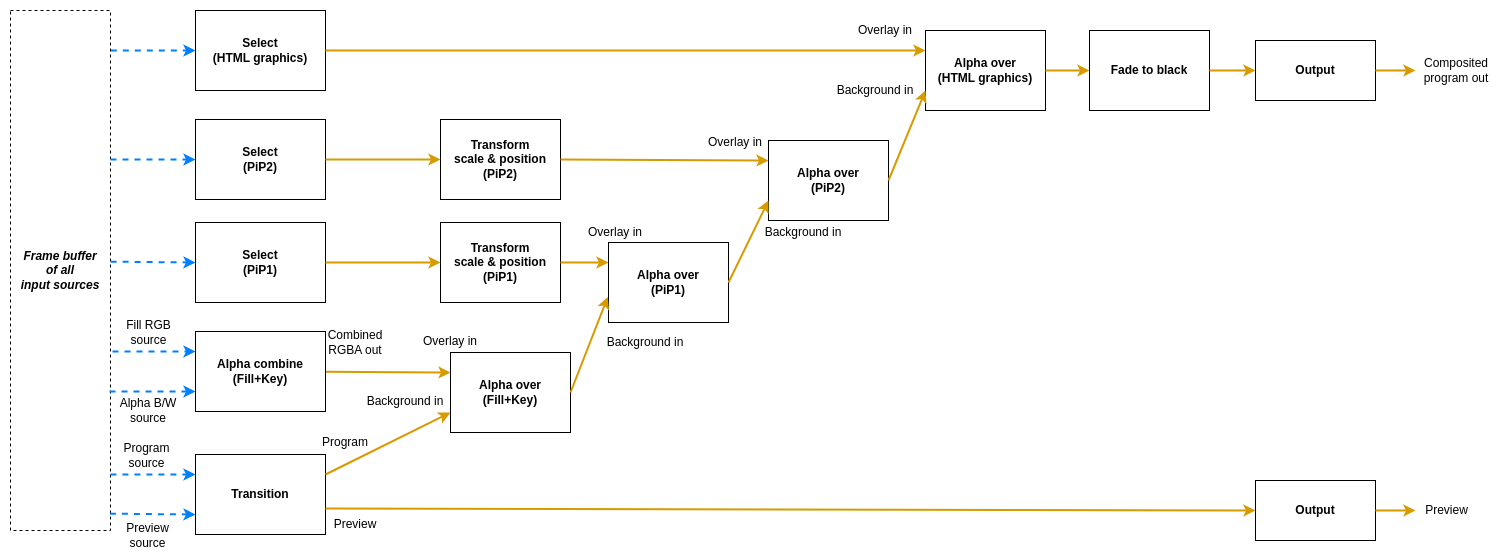

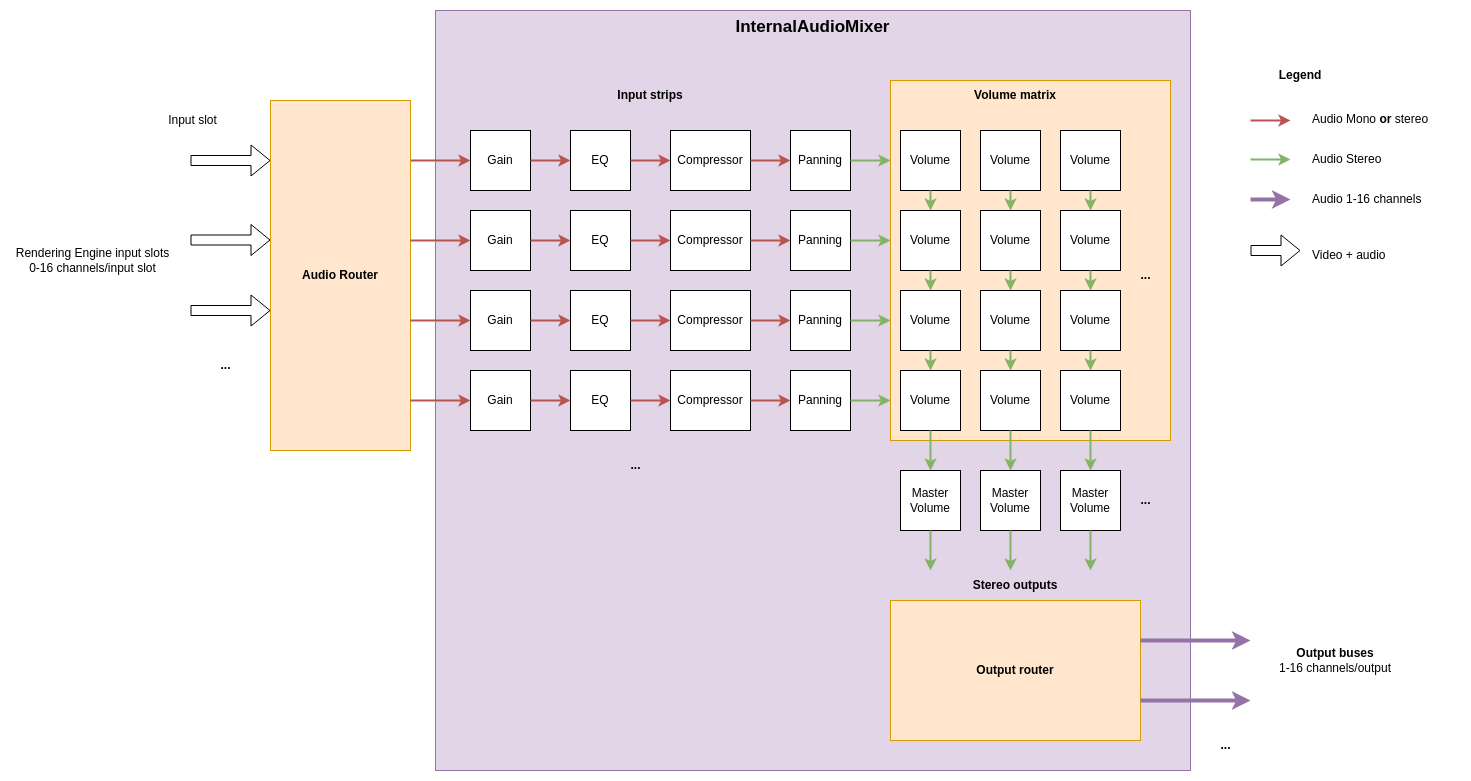

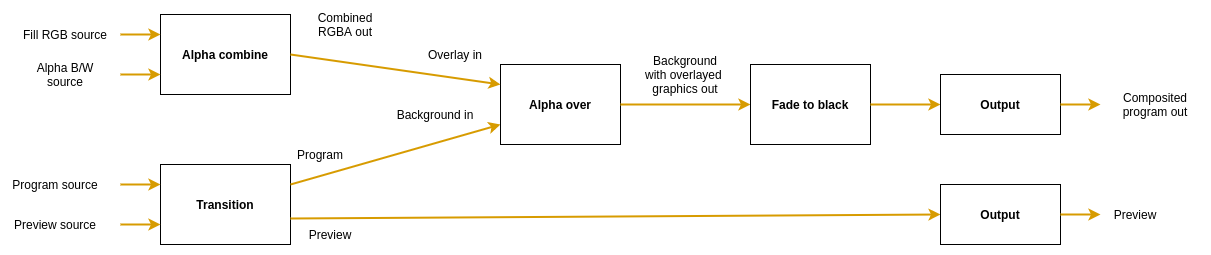

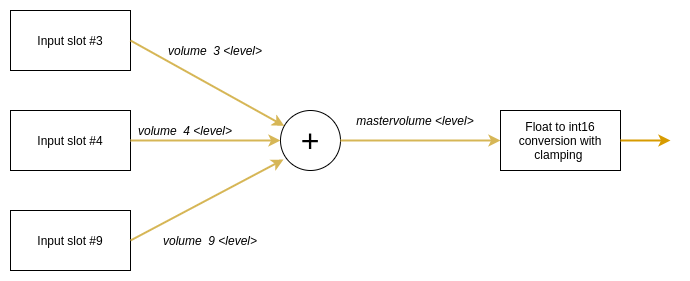

Though it is fully possible to use only the base layer and to build everything else yourself, a very capable version of a rendering engine is supplied. Our rendering engine implementation consists of a modular video mixer, an audio router and mixer, an interface towards 3rd party audio mixers, and an HTML renderer to overlay HTML-based graphics. It defines a control protocol for configuration and control which can be used by specific control panel implementations.

The rendering engine is delivered bundled with the production pipeline component as a binary named acl-renderingengine. This is because the production pipeline component from the base layer is only delivered as a library that the rendering engine implementation makes use of.

Communication with the rendering engine for configuration and control takes place over the control connections from control panels, as provided by the base layer. The control panels are constructed using the control panel library, but to assist in getting up and running fast, a few generic control panels are provided that act as proxies for control commands. One sets up a TCP server and accepts control commands over connections to it, and another acts as a websocket server for the same purpose. Finally, there is one that reads control commands from stdin in a terminal session and forwards them.
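As a rough illustration of how a client might talk to the generic TCP control panel, the Python sketch below opens a connection and sends a single command. The framing (UTF-8 text terminated by a newline), the host, the port and the command text are all assumptions made for this example; consult the rendering engine command documentation for the actual syntax.

```python
import socket


def frame_command(command: str) -> bytes:
    """Encode one command as UTF-8 with a trailing newline (assumed framing)."""
    if "\n" in command:
        raise ValueError("a command must fit on a single line")
    return command.encode("utf-8") + b"\n"


def send_command(host: str, port: int, command: str, timeout_s: float = 2.0) -> None:
    """Open a TCP connection to the control panel proxy and send one command."""
    with socket.create_connection((host, port), timeout=timeout_s) as conn:
        conn.sendall(frame_command(command))


# Example call (host, port and command text are placeholders, not real defaults):
# send_command("127.0.0.1", 9000, "example command")
```

Keeping the framing in its own function means it can be checked without a running control panel.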

The GUI and orchestration layer

On top of the REST API it is possible to build GUIs for configuring and monitoring the system. One default GUI is provided.

Prometheus-exporters can be configured to report on the details of the machines in the system, and there is also an exporter for the REST API statistics. The data in Prometheus can then be presented in Grafana.

Using the control command protocol defined by our rendering engine and either the generic control panels or custom control panel integrations, GUIs can be constructed to configure and control the components inside the rendering engine. For example, Bitfocus Companion can be configured to send control commands to the tcpcontrolpanel which will send them further into the system. The custom integration audio_control_gui can be used to interface with audio panels that support the Mackie Control protocol and to send control commands to the tcpcontrolpanel.

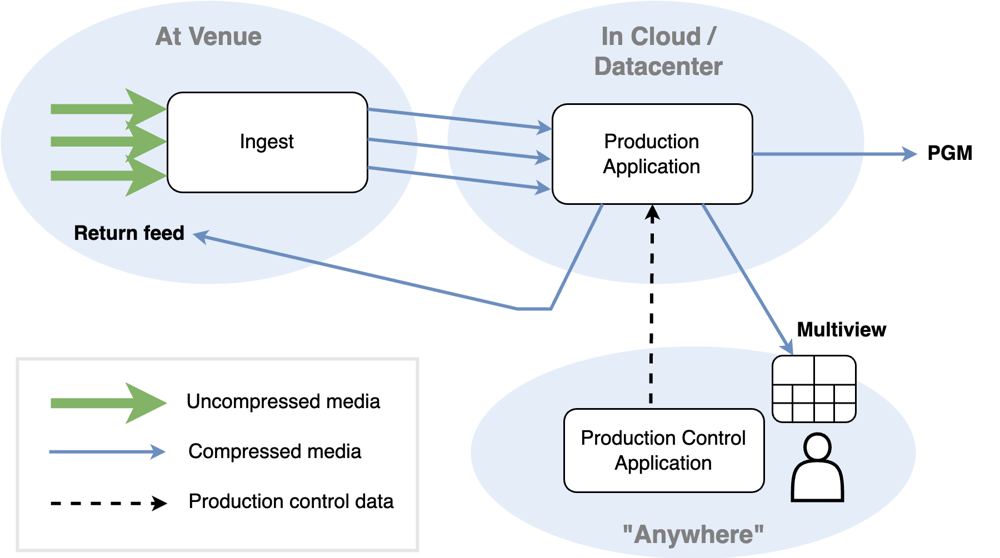

Direct editing mode vs proxy editing mode

The components of the Agile Live platform can be combined in many different ways. The two most common are the Direct Editing Mode and the Proxy Editing Mode. The direct editing mode is what has commonly been done for remote productions, where the mixer operator edits directly in the flow that goes out to the viewer.

Direct editing mode

In the proxy editing mode, the operator works with a low delay but lower quality flow, makes mixing decisions, and then those decisions are replicated in a frame accurate manner on a high quality flow that is delayed in time.

Proxy editing mode

There are good reasons for using a proxy editing mode. The mixer operator may be communicating via intercom with personnel at the venue, such as camera operators, or PTZ cameras may be operated remotely. In these cases it is important that the delay from the cameras to the production pipeline and out to the mixer operator is as short as possible. But low latency for this flow is directly at odds with high-quality video compression and very reliable transport. Therefore, in the proxy editing mode, we have both a low delay workflow and a high quality but slower workflow. This way both requirements can be satisfied. This technique is made possible by the synchronization functionalities of the base layer platform.
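The principle behind proxy editing can be sketched in a few lines of Python. This is a simplified model under stated assumptions, not the platform's implementation: the function names, the cut list and the delay values are invented for illustration. Because every cut decision is tied to a capture timestamp, applying the same decisions to a flow with a longer delay selects the same source for every frame, only later in wall-clock time.

```python
from bisect import bisect_right


def program_source(cuts, frame_time):
    """Return the source selected at frame_time, given time-sorted (time, source) cuts."""
    times = [t for t, _ in cuts]
    index = bisect_right(times, frame_time) - 1
    return cuts[index][1] if index >= 0 else None


def render(cuts, frame_times, pipeline_delay):
    """Return (output_time, frame_time, source) for every frame of one pipeline."""
    return [(t + pipeline_delay, t, program_source(cuts, t)) for t in frame_times]


# Cut decisions made by the operator while watching the low delay flow.
# Times are capture timestamps in milliseconds; all values are illustrative.
cuts = [(0, "cam1"), (60, "cam2"), (100, "cam1")]
frame_times = range(0, 140, 20)

low_delay = render(cuts, frame_times, pipeline_delay=100)
high_quality = render(cuts, frame_times, pipeline_delay=2000)

# Same selection per frame in both flows; only the output times differ.
same_edit = [s for _, _, s in low_delay] == [s for _, _, s in high_quality]
```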

3 - Installation and configuration

A working knowledge of the technical basis of the Agile Live solution is beneficial before starting with this installation guide.

This guide will often point to subpages for the detailed description of steps. The major steps of this guide are:

- Hardware and operating system requirements

- Install, configure and start the System Controller

- The base platform components

  - Install prerequisites

  - Preparing the base system for installation

  - Install Agile Live platform

  - Configure and start the Agile Live components

- Install the configuration-and-monitoring GUI (optional)

- Install Prometheus and Grafana, and configure them (optional)

- Install and start control panel GUI examples

Warning

Some installation scripts described in this guide are using sudo to install software. As always when using sudo, make sure you understand what the scripts do before running them!

1. Hardware and operating system requirements

The bulk of the platform runs on computer systems based on x86 CPUs and running the Ubuntu 22.04 operating system. This includes the following software components: the ingest, the production pipeline, the control panel integrations, and the system controller. The remaining components, i.e. the configuration and monitoring GUI, the control panel GUI examples, and the Prometheus database and Grafana frontend, can run on the platform of your choice, as they are cross-platform.

The platform does not have explicit hardware performance requirements, as it will utilize the performance of the system it is given, but suggestions for hardware specifications can be found here.

Agile Live is supported and tested on Ubuntu 22.04 LTS. Other versions may work but are not officially supported.

2. Install, configure and start the System Controller

The System Controller is the central backend that ties together and controls the different components of the base platform, and that provides the REST API that is used by the user to configure and monitor the system. When each Agile Live component, such as an Ingest or a Production Pipeline, starts up it connects to and registers with the System Controller, so it is a good idea to install and configure the System Controller first.

Instructions on how to install and configure it can be found here.

3. The base platform components

The base platform consists of the software components Ingests, Production Pipelines, and Control Panel interfaces. Each machine that is intended to act as an acquisition device for media shall run exactly one instance of the Ingest application, as it is responsible for interfacing with third party software such as SDKs for SDI cards and NDI.

The production pipeline application instance (interchangeably called a rendering engine application instance in parts of this documentation) uses the resources of its host machine in a non-exclusive way (including the GPU), so more than one instance may reside on a single host machine.

The control panel integrations preferably reside on a machine close to the actual control panel.

However, in a very minimal system not all of these machines need to be separate; in fact, all of the above could run on a single computer if desired.

A guide to installing and configuring the Agile Live base platform can be found here.

4. Install the configuration-and-monitoring GUI (optional)

There is a basic configuration-and-monitoring GUI that is intended to be used as an easy way to get going with using the system. It uses the REST API to configure and monitor the system.

Instructions for how to install it can be found here, and a tutorial for how to use it can be found here.

5. Install Prometheus and Grafana, and configure them (optional)

To collect useful system metrics for stability and performance monitoring, we advise using Prometheus. To visualize the metrics collected by Prometheus, you can use Grafana.

The System Controller receives many internal metrics from the Agile Live components. These can be pulled by a Prometheus instance from the endpoint https://system-controller-host:port/metrics.
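Prometheus scrape endpoints serve samples in a plain-text exposition format, so the output can also be inspected by hand or from a script. The Python sketch below parses such text into a dictionary. The metric names in `SAMPLE` are made up for illustration and are not actual Agile Live metrics, and the parser assumes samples without trailing timestamps.

```python
def parse_metrics(text: str) -> dict:
    """Parse Prometheus text-format samples into {series: value}.

    Comment lines (# HELP, # TYPE) and blank lines are skipped. Samples are
    assumed to have no trailing timestamp, so the value is the last field.
    """
    samples = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#"):
            continue
        series, _, value = line.rpartition(" ")
        samples[series] = float(value)
    return samples


# Hypothetical endpoint output; these names do not come from the product.
SAMPLE = """\
# HELP example_received_frames_total Hypothetical counter, for illustration only.
# TYPE example_received_frames_total counter
example_received_frames_total{source="cam1"} 1500
example_received_frames_total{source="cam2"} 1498
example_uptime_seconds 12.5
"""

metrics = parse_metrics(SAMPLE)
```

In practice the text would be fetched from the metrics endpoint with any HTTP client, and a Prometheus instance would do the scraping and storage.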

It is also possible to install external exporters for various hardware and OS metrics.

To install and run Prometheus exporters on the servers that run ingest and production pipeline software, and to install, configure and run the Prometheus database and the associated Grafana instance on the server dedicated to management and orchestration, follow this guide.

6. Install and start control panel GUI examples

To install the example control panel GUIs that are delivered as source code, follow this guide.

3.1 - Hardware examples

The following hardware specifications serve as examples of how much can be expected from certain hardware setups. The maximum number of inputs and outputs is highly dependent on the quality and resolution settings chosen when setting up a production, so these numbers should be seen as approximations of what can be expected from a given hardware setup under normal use.

Hardware example #1

| System Specifications | |

|---|---|

| CPU | Intel Core i7 12700K |

| RAM | Kingston FURY Beast 32GB (2x16GB) 3200MHz CL16 DDR4 |

| Graphics Card | NVIDIA RTX A2000 12GB |

| PSU | 750W PSU |

| Motherboard | ASUS Prime H670-Plus D4 DDR4 S-1700 ATX |

| SSD | Samsung 980 NVMe M.2 1TB SSD |

| Capture Card (Ingest only) | Blackmagic Design Decklink Duo 2 |

One such server is capable of fulfilling either of the two use-cases below:

- Ingest server

  - With Proxy editing: ingesting 4 sources (encoded as 4 Low Delay streams, AVC 720p50 1 Mbps and 4 High Quality streams, HEVC 1080p50 25 Mbps).

  - With Direct editing: ingesting 6 sources (HEVC 1080p50 25 Mbps).

- Production server, running both a High Quality and a Low Delay pipeline with at least 10 connected sources (i.e. at least 20 incoming streams), with at least one 1080p50 multi-view output, one 1080p High Quality program output and one 720p Low Delay output.

Hardware example #2

| System Specifications | |

|---|---|

| CPU | Intel Core i7 12700K |

| RAM | Kingston FURY Beast 32GB (2x16GB) 6000MHz CL36 DDR5 |

| Graphics Card | NVIDIA RTX 4000 SFF Ada Generation 20GB |

| PSU | 750W PSU |

| Motherboard | ASUS ROG Maximus Z690 Hero DDR5 ATX |

| SSD | WD Blue SN570 M.2 1TB SSD |

| Capture Card (Ingest only) | Blackmagic Design Decklink Quad 2 |

One such server is capable of fulfilling either of the two use-cases below:

- Ingest server

  - With Proxy editing: ingesting 6-8 sources (encoded as 6-8 Low Delay streams, AVC 720p50 1 Mbps and 6-8 High Quality streams, HEVC 1080p50 25 Mbps).

  - With Direct editing: ingesting 8-10 sources (HEVC 1080p50 25 Mbps).

- Production server, running both the High Quality and the Low Delay pipeline with at least 20 connected sources (i.e. at least 40 incoming streams), with at least three 4K multi-view outputs, four 1080p High Quality outputs and four 720p Low Delay outputs.
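To get a feeling for the network load behind these numbers, the video bitrates quoted in the two examples can be added up. The sketch below is only arithmetic on the figures above; it ignores audio, transport overhead and retransmissions, so real bandwidth use will be higher. The function names are made up for this illustration.

```shell
#!/bin/sh
# Rough outgoing video bitrate (Mbps) of an Ingest server.

# Proxy editing: every source is sent once as Low Delay and once as High Quality.
proxy_bitrate_mbps() {
    # $1 = number of sources, $2 = Low Delay Mbps, $3 = High Quality Mbps
    echo $(( $1 * ($2 + $3) ))
}

# Direct editing: every source is sent once.
direct_bitrate_mbps() {
    # $1 = number of sources, $2 = Mbps per stream
    echo $(( $1 * $2 ))
}

proxy_bitrate_mbps 4 1 25     # hardware example #1, proxy editing
direct_bitrate_mbps 6 25      # hardware example #1, direct editing
proxy_bitrate_mbps 8 1 25     # hardware example #2, proxy editing, upper bound
direct_bitrate_mbps 10 25     # hardware example #2, direct editing, upper bound
```

With the figures above this gives 104, 150, 208 and 250 Mbps respectively.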

3.2 - The System Controller

The System Controller is the central backend that ties together and controls the different components of the base platform, and that provides the REST API that is used by the user to configure and monitor the system.

When each Agile Live component (such as an Ingest or a Production Pipeline) starts up, it connects to and registers with the System Controller. This means the System Controller must run in a network location that is reachable from all other components.

From release 6.0.0 onwards, the System Controller is distributed as a Debian package, acl-system-controller.

To install the System Controller application, do the following:

sudo apt -y install ./acl-system-controller_<version>_amd64.deb

The System Controller binary will be installed in the directory /opt/agile_live/bin/ and its configuration file will be placed in /etc/opt/agile_live/.

Note

In releases 5.0.0 and earlier, the System Controller application was delivered in the release artifact agile-live-system-controller-<x.y.z>-<checksum>.zip. Unpack the file and place the contents in a desired location.

An example configuration file is shipped with this release, with the suffix .example. Remove this suffix to use the file.

The System Controller is configured using a settings file, environment variables, or a combination of the two. The settings file, named acl_sc_settings.json, is searched for in /etc/opt/agile_live and in the current directory. Alternatively, the search path can be specified with a flag on the command line when starting the application:

./acl-system-controller

for the default search paths, or

./acl-system-controller -settings-path /path/to/directory/where/settings_file/exists

to search a specific directory.

To override settings from the configuration file with environment variables, construct each variable name from the JSON objects in the settings file: start with the prefix ACL_SC, then append the path of the JSON object flattened with _ as the delimiter, e.g.:

export ACL_SC_LOGGER_LEVEL=debug

export ACL_SC_PROTO=https
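The naming rule can be illustrated with a small helper that turns a setting's path into the matching variable name. The helper name and the dotted path notation are made up for this sketch, and the paths logger.level and proto are inferred from the two examples above rather than taken from the settings file itself.

```shell
#!/bin/sh
# Build the environment variable name for a setting, given its path in the
# settings file written with dots between nested JSON objects.
sc_env_name() {
    # "logger.level" -> "ACL_SC_LOGGER_LEVEL"
    printf 'ACL_SC_%s\n' "$(printf '%s' "$1" | tr 'a-z.' 'A-Z_')"
}

sc_env_name logger.level
sc_env_name proto
```

This prints ACL_SC_LOGGER_LEVEL and ACL_SC_PROTO, the two names used in the export lines above.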

Starting with the basic configuration file in the release, edit a few settings inside it (see this guide for help with the security related parameters):

- change the psk parameter to something random of your own

- change the salt to some long string of your own

- set the username and password to protect the REST API

- specify TLS certificate and key files

- set the host and port parameters to where the System Controller shall be reached
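One possible way to produce random values for psk and salt is sketched below. The length of 32 characters simply mirrors the example PSK used elsewhere in this documentation, and 64 characters for the salt is an arbitrary choice for "some long string"; the exact length or character requirements are not stated here, so check them against the security guide.

```shell
#!/bin/sh
# Print a random alphanumeric string of the requested length.
random_string() {
    # $1 = length; characters are drawn from the kernel's random source
    LC_ALL=C tr -dc 'A-Za-z0-9' < /dev/urandom | head -c "$1"
    echo
}

echo "psk:  $(random_string 32)"
echo "salt: $(random_string 64)"
```

Copy the printed values into the settings file by hand; the script itself does not modify any configuration.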

Also consider setting the file_name_prefix to set the log file location, otherwise it defaults to /tmp/.

Warning

Since passwords will be inserted in this configuration file, it is recommended to set the file permissions so that only the user that executes the System Controller can read it.

From the installation directory, the System Controller is started with:

./acl-system-controller

If started successfully, it will display a log message showing the server endpoint.

The System Controller comes with built-in Swagger documentation that makes it easy to explore the API in a regular web browser. To access the Swagger REST API documentation hosted by the System Controller, open https://<IP>:<port>/swagger/ in your browser. The Swagger UI can be used to poll or edit the state of the actual system by using the “Try it out” buttons.

Running the System Controller as a service

It may be useful to run the System Controller as a service in Ubuntu, so that it starts automatically on boot and restarts if it terminates abnormally. In Ubuntu 22.04 systemd manages the services. Here follows an example of how a service can be configured, started and monitored.

First create a “service unit file”, named for example /etc/systemd/system/acl-system-controller.service and put the following in it:

[Unit]

Description=Agile Live System Controller service

[Service]

User=<YOUR_USER>

Environment=<ANY_ENV_VARS_HERE>

ExecStart=/opt/agile_live/bin/acl-system-controller

Restart=always

[Install]

WantedBy=multi-user.target

Replace <YOUR_USER> with the user that will be executing the System Controller binary on the host machine. The Environment attribute is used if you want to set any parameters as environment variables.

After saving the service unit file, run:

sudo systemctl daemon-reload

sudo systemctl enable acl-system-controller.service

sudo systemctl start acl-system-controller

The service should now be started. The status of the service can be queried using:

sudo systemctl status acl-system-controller.service

3.3 - The base platform

This page describes the steps needed to install and configure the base platform.

Install Third-party packages

Agile Live has a few dependencies on third party software that have to be installed separately first. Which ones are needed depends on the component of Agile Live that you will be running on the specific system.

A machine running the Ingest needs the following:

- CUDA Toolkit

- oneVPL

- Blackmagic Desktop Video (Optional, is needed when using Blackmagic DeckLink SDI cards)

- NDI SDK for Linux

- Base packages via ‘apt’

A machine running the Rendering Engine only needs a subset:

- CUDA Toolkit

- Base packages via ‘apt’

A machine running control panels needs yet fewer:

- Base packages via ‘apt’

Hint

For ease of use during the installation instructions below, it is suggested to copy the contents of each commands section into a shell script and run that shell script.

CUDA Toolkit

For video encoding and decoding on NVIDIA GPUs you need the NVIDIA CUDA Toolkit installed. It must be installed on Ingest machines even if they don’t have NVIDIA cards.

wget https://developer.download.nvidia.com/compute/cuda/repos/ubuntu2204/x86_64/cuda-keyring_1.1-1_all.deb

sudo dpkg -i cuda-keyring_1.1-1_all.deb

sudo apt update

sudo apt -y install cuda

You need to reboot the system after installation. Then check the installation by running nvidia-smi. If installing more dependencies, it is ok to proceed with that and reboot once after installing all parts.

oneVPL

All machines running the Ingest application need the Intel oneAPI Video Processing Library (oneVPL) installed. It is used for encoding and thumbnail processing on Intel GPUs and is required even for Ingests which will not use the Intel GPU for encoding. Install oneVPL by running:

sudo apt install -y gpg-agent wget

# Download and run oneVPL offline installer:

# https://www.intel.com/content/www/us/en/developer/articles/tool/oneapi-standalone-components.html#onevpl

VPL_INSTALLER=l_oneVPL_p_2023.1.0.43488_offline.sh

wget https://registrationcenter-download.intel.com/akdlm/IRC_NAS/e2f5aca0-c787-4e1d-a233-92a6b3c0c3f2/$VPL_INSTALLER

chmod +x $VPL_INSTALLER

sudo ./$VPL_INSTALLER -a -s --eula accept

# Install the latest general purpose GPU (GPGPU) run-time and development software packages

# https://dgpu-docs.intel.com/driver/client/overview.html

wget -qO - https://repositories.intel.com/graphics/intel-graphics.key | \

sudo gpg --dearmor --output /usr/share/keyrings/intel-graphics.gpg

echo 'deb [arch=amd64 signed-by=/usr/share/keyrings/intel-graphics.gpg] https://repositories.intel.com/graphics/ubuntu jammy arc' | \

sudo tee /etc/apt/sources.list.d/intel.gpu.jammy.list

sudo apt update

sudo apt install -y ffmpeg intel-media-va-driver-non-free libdrm2 libmfx-gen1.2 libva2

# Add VA environment variables

if [[ -z "${LIBVA_DRIVERS_PATH}" ]]; then

sudo sh -c "echo 'LIBVA_DRIVERS_PATH=/usr/lib/x86_64-linux-gnu/dri' >> /etc/environment"

fi

if [[ -z "${LIBVA_DRIVER_NAME}" ]]; then

sudo sh -c "echo 'LIBVA_DRIVER_NAME=iHD' >> /etc/environment"

fi

# Give users access to the GPU device for compute and render node workloads

# https://www.intel.com/content/www/us/en/develop/documentation/installation-guide-for-intel-oneapi-toolkits-linux/top/prerequisites/install-intel-gpu-drivers.html

sudo usermod -aG video,render ${USER}

# Set environment variables for development

echo "# oneAPI environment variables for development" >> ~/.bashrc

echo "source /opt/intel/oneapi/setvars.sh > /dev/null" >> ~/.bashrc

You need to reboot the system after installation. If installing more dependencies, it is ok to proceed with that and reboot once after installing all parts.

Support for Blackmagic Design SDI grabber cards

If the Ingest shall be able to use Blackmagic DeckLink SDI cards, the drivers need to be installed.

Download Desktop Video from https://www.blackmagicdesign.com/se/support/family/capture-and-playback.

Unpack and install (adjust the version numbers in the commands below to match the version you downloaded):

sudo apt install dctrl-tools dkms

tar -xvf Blackmagic_Desktop_Video_Linux_12.7.tar.gz

cd Blackmagic_Desktop_Video_Linux_12.7/deb/x86_64

sudo dpkg -i desktopvideo_12.7a4_amd64.deb

Support for NDI sources

For machines running Ingests, you need to install the NDI library and additional dependencies:

## Install library

sudo apt -y install avahi-daemon avahi-discover avahi-utils libnss-mdns mdns-scan

wget https://downloads.ndi.tv/SDK/NDI_SDK_Linux/Install_NDI_SDK_v5_Linux.tar.gz

tar -xvzf Install_NDI_SDK_v5_Linux.tar.gz

PAGER=skip-pager ./Install_NDI_SDK_v5_Linux.sh <<< y

cd NDI\ SDK\ for\ Linux/lib/x86_64-linux-gnu/

NDI_LIBRARY_FILE=$(ls libndi.so.5.*.*)

cd ../../..

sudo cp NDI\ SDK\ for\ Linux/lib/x86_64-linux-gnu/${NDI_LIBRARY_FILE} /usr/lib/x86_64-linux-gnu

sudo ln -fs /usr/lib/x86_64-linux-gnu/${NDI_LIBRARY_FILE} /usr/lib/x86_64-linux-gnu/libndi.so

sudo ln -fs /usr/lib/x86_64-linux-gnu/${NDI_LIBRARY_FILE} /usr/lib/x86_64-linux-gnu/libndi.so.5

sudo ldconfig

sudo cp NDI\ SDK\ for\ Linux/include/* /usr/include/.

Base packages installed via ‘apt’

When installing version 5.0.0 or earlier, please refer to this guide for this step.

All systems that run an Ingest, Rendering Engine or Control Panel need chrony as NTP client.

The systemd-coredump package is also really handy to have installed to be able to debug core dumps later on, but is technically optional.

These packages can be installed with the apt package manager:

sudo apt -y update && sudo apt -y upgrade && sudo apt -y install chrony systemd-coredump

Preparing the base system for installation

To prepare the system for Agile Live, the NTP client must be configured correctly by following this guide, and some general Linux settings shall be configured by following this guide. Also, CUDA should be prevented from automatically installing upgrades by following this guide.

Installing the Agile Live components

When installing version 5.0.0 or earlier, please refer to this guide for this step.

The following Agile Live .deb packages are distributed:

- acl-system-controller - Contains the System Controller application (explained in separate section)

- acl-libingest - Contains the library used by the Ingest application

- acl-ingest - Contains the Ingest application

- acl-libproductionpipeline - Contains the libraries used by the Rendering Engine application

- acl-renderingengine - Contains Agile Live’s default Rendering Engine application

- acl-libcontroldatasender - Contains the libraries used by Control Panel applications

- acl-controlpanels - Contains the generic Control Panel applications

- acl-dev - Contains the header files required for development of custom Control Panels and Rendering Engines

All binaries will be installed in the directory /opt/agile_live/bin. Libraries are installed in /opt/agile_live/lib. Header files are installed in /opt/agile_live/include.

Ingest

To install the Ingest application, install the following .deb-packages:

sudo apt -y install ./acl-ingest_<version>_amd64.deb ./acl-libingest_<version>_amd64.deb

Rendering engine

To install the Rendering Engine application, install the following .deb-packages:

sudo apt -y install ./acl-renderingengine_<version>_amd64.deb ./acl-libproductionpipeline_<version>_amd64.deb

Control panels

To install the generic Control Panel applications acl-manualcontrolpanel, acl-tcpcontrolpanel and acl-websocketcontrolpanel, install the following .deb-packages:

sudo apt -y install ./acl-controlpanels_<version>_amd64.deb ./acl-libcontroldatasender_<version>_amd64.deb

Dev

To install the acl-dev package containing the header files needed for building your own applications, install the following .deb-package:

sudo apt -y install ./acl-dev_<version>_amd64.deb

Configuring and starting the Ingest(s)

The Ingest component is a binary that ingests raw video input sources such as SDI or NDI, then timestamps, processes and encodes them, and originates transport to the rest of the platform.

Settings for the ingest binary are added as environment variables. There is one setting that must be set:

- ACL_SYSTEM_CONTROLLER_PSK shall be set to the same value as in the settings file of the System Controller

Also, there are some parameters that have default settings that you will most probably want to override:

- ACL_SYSTEM_CONTROLLER_IP and ACL_SYSTEM_CONTROLLER_PORT shall be set to the location where the System Controller is hosted

- ACL_INGEST_NAME is optional but is useful as an aid to identify the Ingest in the REST interface if multiple instances are connected to the same System Controller

You may also want to set the log location by setting ACL_LOG_PATH. The log location defaults to /tmp/.

Hint

In case you forget the names of these environment variables or want to see their default values, run the component with the --help flag, e.g. ./acl-ingest --help.

As an example, from the installation directory, the Ingest is started with:

ACL_SYSTEM_CONTROLLER_IP="10.10.10.10" ACL_SYSTEM_CONTROLLER_PORT=8080 ACL_SYSTEM_CONTROLLER_PSK="FBCZeWB3kMj5me58jmcpmEd3bcPZbp4q" ACL_INGEST_NAME="My Ingest" ./acl-ingest

If started successfully, it will display a log message showing that the Ingest is up and running.
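Because the PSK is the one mandatory setting, a start script can check for it before launching the binary. The following is a minimal sketch of such a check; the function name and the wrapper usage shown in the comment are hypothetical, and only the environment variable names come from this page.

```shell
#!/bin/sh
# Check that the mandatory setting is present before starting a component.
check_required_env() {
    if [ -z "${ACL_SYSTEM_CONTROLLER_PSK:-}" ]; then
        echo "ACL_SYSTEM_CONTROLLER_PSK is not set" >&2
        return 1
    fi
    # Optional settings: only reported, the component applies its own defaults
    echo "System Controller: ${ACL_SYSTEM_CONTROLLER_IP:-<default>}:${ACL_SYSTEM_CONTROLLER_PORT:-<default>}"
}

# Possible use in a wrapper script (hypothetical):
#   check_required_env && exec /opt/agile_live/bin/acl-ingest
```

The same check applies to the Rendering Engine and the Control Panels, since they use the same variable for the PSK.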

Running the Ingest SW as a service

It may be useful to run the Ingest SW as a service in Ubuntu, so that it starts automatically on boot and restarts if it terminates abnormally. In Ubuntu 22.04 systemd manages the services. Here follows an example of how a service can be configured, started and monitored.

First create a “service unit file”, named for example /etc/systemd/system/acl-ingest.service and put the following in it:

[Unit]

Description=Agile Live Ingest service

[Service]

User=<YOUR_USER>

Environment=ACL_SYSTEM_CONTROLLER_PSK=<YOUR_PSK>

ExecStart=/bin/bash -c '\

source /opt/intel/oneapi/setvars.sh; \

exec /opt/agile_live/bin/acl-ingest'

Restart=always

[Install]

WantedBy=multi-user.target

Replace <YOUR_USER> with the user that will be executing the Ingest binary on the host machine. The Environment attribute should be set in the same way as described above for manually starting the binary.

After saving the service unit file, run:

sudo systemctl daemon-reload

sudo systemctl enable acl-ingest.service

sudo systemctl start acl-ingest

The service should now be started. The status of the service can be queried using:

sudo systemctl status acl-ingest.service

Configuring and starting the Production pipeline(s) including rendering engine

The production pipeline component and the rendering engine component are combined into one binary named acl-renderingengine. It terminates the transport of media streams from ingests, demuxes and decodes them, aligns them according to timestamps and clock sync, separately mixes audio and video (including HTML-based graphics insertion) and finally encodes, muxes and originates transport of the finished mixed outputs as well as multiviews.

It is possible to run just one production pipeline if doing direct editing (the “traditional way”, in the stream) or to use two pipelines in order to set up a proxy editing workflow. In the latter case, one pipeline is designated for the Low Delay flow, which gives quick feedback on the editing commands, and one is designated for the High Quality flow, which produces the final quality stream to broadcast to the viewers. The Production Pipeline is simply called a “pipeline” in the REST API. The rendering engine is not managed through the REST API but rather through the control command API from the control panels.

Settings for the production pipeline binary are added as environment variables. There is one setting that must be set:

- ACL_SYSTEM_CONTROLLER_PSK shall be set to the same value as in the settings file of the System Controller

Also, there are some parameters that have default settings that you will most probably want to override:

- ACL_SYSTEM_CONTROLLER_IP and ACL_SYSTEM_CONTROLLER_PORT shall be set to the location where the System Controller is hosted

- ACL_RENDERING_ENGINE_NAME is needed to identify the different production pipelines in the REST API and shall thus be set uniquely and descriptively

- ACL_MY_PUBLIC_IP shall be set to the IP address or hostname where the ingest shall find the production pipeline. If the production pipeline is behind a NAT or needs DNS resolving, setting this variable is necessary

- DISPLAY is needed but may already be set by the operating system. If not (because the server is headless) it needs to be set.

Hint

If running a headless server and setting the DISPLAY environment variable, run an instance of Xvfb to provide the virtual “display” to point towards. E.g. start Xvfb by running Xvfb -ac :99 -screen 0 1280x1024x16, and when starting the Rendering Engine set DISPLAY=:99. It is recommended to start Xvfb as a service on headless machines.

You may also want to set the log location by setting ACL_LOG_PATH. The log location defaults to /tmp/.
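For the recommendation to run Xvfb as a service on headless machines, a unit file along the following lines could be used. This is only a sketch modelled on the service unit files shown elsewhere on this page: the unit description, the suggested file name and the binary path /usr/bin/Xvfb are assumptions, so verify the path with "command -v Xvfb" on your machine.

```shell
#!/bin/sh
# Print an example systemd unit that keeps Xvfb running on display :99.
# The binary path /usr/bin/Xvfb is an assumption; adjust it if needed.
render_xvfb_unit() {
    # $1 = user that shall run Xvfb
    cat <<EOF
[Unit]
Description=Xvfb virtual display for the Rendering Engine

[Service]
User=$1
ExecStart=/usr/bin/Xvfb -ac :99 -screen 0 1280x1024x16
Restart=always

[Install]
WantedBy=multi-user.target
EOF
}

render_xvfb_unit "$(id -un)"
```

Save the output as, for example, /etc/systemd/system/xvfb.service, then reload, enable and start it with systemctl in the same way as the other services, and start the Rendering Engine with DISPLAY=:99.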

Here is an example of how to start two instances (one for Low Delay flow and one for High Quality Flow) of the acl-renderingengine application and name them accordingly so that you and the GUI can tell them apart. First start the Low Delay instance by running:

ACL_SYSTEM_CONTROLLER_IP="10.10.10.10" ACL_SYSTEM_CONTROLLER_PORT=8080 ACL_SYSTEM_CONTROLLER_PSK="FBCZeWB3kMj5me58jmcpmEd3bcPZbp4q" ACL_RENDERING_ENGINE_NAME="My LD Pipeline" ./acl-renderingengine

Then start the High Quality Rendering Engine in the same way:

ACL_SYSTEM_CONTROLLER_IP="10.10.10.10" ACL_SYSTEM_CONTROLLER_PORT=8080 ACL_SYSTEM_CONTROLLER_PSK="FBCZeWB3kMj5me58jmcpmEd3bcPZbp4q" ACL_RENDERING_ENGINE_NAME="My HQ Pipeline" ./acl-renderingengine

Configuring and starting the generic Control Panels

TCP control panel

acl-tcpcontrolpanel is a control panel application that starts a TCP server on a configurable set of endpoints and relays control commands over a control connection if one has been set up. It is preferably run geographically very close to the control panel whose control commands it will relay, but can alternatively run close to the production pipeline that it is connected to. To start with, it is easiest to run it on the same machine as the associated production pipeline.

Settings for the tcp control panel binary are added as environment variables. There is one setting that must be set:

- ACL_SYSTEM_CONTROLLER_PSK shall be set to the same value as in the settings file of the System Controller

Also, there are some parameters that have default settings that you will most probably want to override:

- ACL_SYSTEM_CONTROLLER_IP and ACL_SYSTEM_CONTROLLER_PORT shall be set to the location where the System Controller is hosted

- ACL_CONTROL_PANEL_NAME can be set to make it easier to identify the control panel through the REST API

- ACL_TCP_ENDPOINT, from release 6.0.0 on, contains the IP address and port of the TCP endpoint to be opened, written as <ip>:<port>. Several clients can connect to the same listening port. In release 5.0.0 and earlier, instead use ACL_TCP_ENDPOINTS, a comma-separated list of endpoints to be opened, where each endpoint is written as <ip>:<port>

You may also want to set the log location by setting ACL_LOG_PATH. The log location defaults to /tmp/.

As an example, from the installation directory, the tcp control panel is started with:

ACL_SYSTEM_CONTROLLER_IP="10.10.10.10" ACL_SYSTEM_CONTROLLER_PORT=8080 ACL_SYSTEM_CONTROLLER_PSK="FBCZeWB3kMj5me58jmcpmEd3bcPZbp4q" ACL_TCP_ENDPOINT=0.0.0.0:7000 ACL_CONTROL_PANEL_NAME="My TCP Control Panel" ./acl-tcpcontrolpanel

Websocket control panel

acl-websocketcontrolpanel is a control panel application that acts as a websocket server, and relays control commands that come in over websockets that are opened typically by web-based GUIs.

The basic settings are the same as for the tcp control panel. But to specify where the websocket server shall open an endpoint, use this environment variable:

- ACL_WEBSOCKET_ENDPOINT is the endpoint to listen for incoming messages on, in the form <ip>:<port>

You may also want to set the log location by setting ACL_LOG_PATH. The log location defaults to /tmp/.

An example of how to start it is the following:

ACL_SYSTEM_CONTROLLER_IP="10.10.10.10" ACL_SYSTEM_CONTROLLER_PORT=8080 ACL_SYSTEM_CONTROLLER_PSK="FBCZeWB3kMj5me58jmcpmEd3bcPZbp4q" ACL_WEBSOCKET_ENDPOINT=0.0.0.0:6999 ACL_CONTROL_PANEL_NAME="My Websocket Control Panel" ./acl-websocketcontrolpanel

Manual control panel

acl-manualcontrolpanel is a basic Control Panel application operated from the command line.

The basic settings are the same as for the other generic control panels, but it does not open any network endpoints for input. Instead, the control commands are written in the terminal. It can be started with:

ACL_SYSTEM_CONTROLLER_IP="10.10.10.10" ACL_SYSTEM_CONTROLLER_PORT=8080 ACL_SYSTEM_CONTROLLER_PSK="FBCZeWB3kMj5me58jmcpmEd3bcPZbp4q" ACL_CONTROL_PANEL_NAME="My Manual Control Panel" ./acl-manualcontrolpanel

3.3.1 - NTP

To ensure that all ingest servers and production pipelines are in sync, a clock synchronization protocol called NTP is required. Historically that has been handled by a systemd service called timesyncd.

Chrony

Starting from version 4.0.0 of Agile Live the required NTP client has switched from systemd-timesyncd to chrony. Chrony is installed by default in many Linux distributions since it has a lot of advantages over using systemd-timesyncd. Some of the advantages are listed below.

- Full NTP support (systemd-timesyncd only supports Simple NTP)

- Syncing against multiple servers

- More complex but more accurate

- Gradually adjusts the system time instead of hard steps

Installation

Install chrony with:

$ sudo apt install chrony

This will replace systemd-timesyncd if installed.

NTP servers

The default configuration for chrony uses a pool of 8 NTP servers to synchronize against. It is recommended to use these default servers hosted by Ubuntu.

Verify that the configuration is valid by checking the configuration file after installing.

$ cat /etc/chrony/chrony.conf

...

pool ntp.ubuntu.com iburst maxsources 4

pool 0.ubuntu.pool.ntp.org iburst maxsources 1

pool 1.ubuntu.pool.ntp.org iburst maxsources 1

pool 2.ubuntu.pool.ntp.org iburst maxsources 2

...

The file should also contain the line below for the correct handling of leap seconds. It is used to set the leap second timezone to get the correct TAI time.

leapsectz right/UTC
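A quick way to check for this line is sketched below. The function name is made up for this example, and the check only looks for an active (uncommented) leapsectz right/UTC line in the file given as argument.

```shell
#!/bin/sh
# Report whether a chrony configuration file sets the leap second timezone.
has_leapsectz() {
    # $1 = path to chrony.conf; succeeds if an uncommented line is present
    grep -Eq '^[[:space:]]*leapsectz[[:space:]]+right/UTC' "$1"
}

conf=/etc/chrony/chrony.conf
if [ -r "$conf" ] && has_leapsectz "$conf"; then
    echo "leapsectz is configured"
else
    echo "leapsectz right/UTC not found in $conf"
fi
```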

Useful Commands

To get detailed information on how the system time is running compared to NTP, use the chrony client chronyc. The subcommand tracking shows information about the current system time and the selected time server used.

$ chronyc tracking

Reference ID : C23ACA14 (sth1.ntp.netnod.se)

Stratum : 2

Ref time (UTC) : Wed Mar 08 07:23:37 2023

System time : 0.000662344 seconds slow of NTP time

Last offset : -0.000669114 seconds

RMS offset : 0.001053432 seconds

Frequency : 10.476 ppm slow

Residual freq : +0.001 ppm

Skew : 0.295 ppm

Root delay : 0.007340557 seconds

Root dispersion : 0.001045418 seconds

Update interval : 1028.7 seconds

Leap status : Normal

Use the sources subcommand to get a list of the time servers that chrony uses.
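When monitoring several machines it can be handy to pull just the current offset out of the tracking report. The helper below is a sketch that assumes the "System time" line has the layout shown in the example output above; the function name is hypothetical.

```shell
#!/bin/sh
# Extract the current offset from 'chronyc tracking' output read on stdin.
system_time_offset() {
    # Prints the offset in seconds and its direction, e.g. "0.000662344 slow"
    awk -F' : ' '/^System time/ { split($2, f, " "); print f[1], f[3] }'
}

# Demonstration with the line from the example output above
printf 'System time : 0.000662344 seconds slow of NTP time\n' | system_time_offset
```

On a machine with chrony installed, the live value is obtained with chronyc tracking | system_time_offset.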

$ chronyc sources -v

.-- Source mode '^' = server, '=' = peer, '#' = local clock.

/ .- Source state '*' = current best, '+' = combined, '-' = not combined,

| / 'x' = may be in error, '~' = too variable, '?' = unusable.

|| .- xxxx [ yyyy ] +/- zzzz

|| Reachability register (octal) -. | xxxx = adjusted offset,

|| Log2(Polling interval) --. | | yyyy = measured offset,

|| \ | | zzzz = estimated error.

|| | | \

MS Name/IP address Stratum Poll Reach LastRx Last sample

===============================================================================

^- prod-ntp-5.ntp4.ps5.cano> 2 6 17 8 +59us[ -11us] +/- 23ms

^- pugot.canonical.com 2 6 17 7 -1881us[-1951us] +/- 48ms

^+ prod-ntp-4.ntp1.ps5.cano> 2 6 17 7 -1872us[-1942us] +/- 24ms

^+ prod-ntp-3.ntp1.ps5.cano> 2 6 17 6 +878us[ +808us] +/- 23ms

^- ntp4.flashdance.cx 2 6 17 7 -107us[ -176us] +/- 34ms

^+ time.cloudflare.com 3 6 17 8 -379us[ -449us] +/- 5230us

^* time.cloudflare.com 3 6 17 8 +247us[ +177us] +/- 5470us

^- ntp5.flashdance.cx 2 6 17 7 -1334us[-1404us] +/- 34ms

Leap seconds

Due to small variations in the Earth’s rotation speed a one second time adjustment is occasionally introduced in UTC (Coordinated Universal Time). This is usually handled by stepping back the clock in the NTP server one second. These hard steps could cause problems in such a time critical product as video production. To avoid this the system uses a clock known as TAI (International Atomic Time), which does not introduce any leap seconds, and is therefore slightly offset from UTC (37 seconds in 2023).

Leap smearing

Another technique for handling the introduction of a new leap second is called leap smearing. Instead of stepping back the clock in one big step, the leap second is introduced in many small, microsecond-sized adjustments over a longer period, usually 12 to 24 hours. This technique is used by most of the larger cloud providers. Most NTP servers that use leap smearing don’t announce the current TAI offset and will thereby cause issues if used in combination with systems that are using TAI time. It is therefore highly recommended to use Ubuntu’s default NTP servers as described above, as none of these use leap smearing. Mixing leap smearing and non-leap smearing time servers will cause components in the system to have clocks that are off from each other by 37 seconds (as of 2023), as the ones using leap smearing time servers without TAI offset will set the TAI clock to UTC.

3.3.2 - Linux settings

UDP buffers

To improve the performance while receiving and sending multiple streams, and not overflow UDP buffers (leading to receive buffer errors as identified using e.g. ‘netstat -suna’), it is recommended to adjust some UDP buffer settings on the host system.

The recommended buffer size is 16 MB, but some setups may need larger buffers while others may manage with smaller ones. To change the buffer values, issue:

sudo sysctl -w net.core.rmem_default=16000000

sudo sysctl -w net.core.rmem_max=16000000

sudo sysctl -w net.core.wmem_default=16000000

sudo sysctl -w net.core.wmem_max=16000000

To make the changes persistent, edit or add the values in /etc/sysctl.conf:

net.core.rmem_default=16000000

net.core.rmem_max=16000000

net.core.wmem_default=16000000

net.core.wmem_max=16000000
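To confirm that the values are in effect, for example after a reboot, the current limits can be compared with the recommended size. The sketch below reads the values from /proc/sys/net/core, which is where the kernel exposes the net.core settings; the function and variable names are made up for this example.

```shell
#!/bin/sh
# Compare the current UDP buffer limits with the recommended 16 MB.
RECOMMENDED=16000000

buffer_ok() {
    # $1 = current value in bytes; succeeds if at least the recommended size
    [ "$1" -ge "$RECOMMENDED" ]
}

for key in rmem_default rmem_max wmem_default wmem_max; do
    file="/proc/sys/net/core/$key"
    [ -r "$file" ] || continue
    value=$(cat "$file")
    if buffer_ok "$value"; then
        echo "net.core.$key=$value ok"
    else
        echo "net.core.$key=$value is below $RECOMMENDED"
    fi
done
```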

Core dump

On the rare occasion that a segmentation fault or a similar error should occur, a core dump is generated by the operating system to record the state of the application to aid in troubleshooting. By default these core dumps are handled by the ‘apport’ service in Ubuntu and not written to file. To store them to file instead we recommend installing the systemd core dump service.

sudo apt install systemd-coredump

Core dumps will now be compressed and stored in /var/lib/systemd/coredump.

The systemd core dump package also includes a helpful CLI tool for accessing core dumps.

List all core dumps:

$ coredumpctl list

TIME PID UID GID SIG COREFILE EXE

Thu 2022-10-20 17:24:16 CEST 985485 1007 1008 11 present /usr/bin/example

You can print some basic information from the core dump to send to the Agile Live developers to aid in debugging the crash:

$ coredumpctl info 985485

In some cases, the developers might want to see the entire core dump to understand the crash. In that case, save the core dump using:

$ coredumpctl dump 985485 --output=myfilename.coredump

Core dumps will not persist between reboots.

3.3.3 - Disabling unattended upgrades of CUDA toolkit

CUDA Driver/library mismatch

By default, Ubuntu runs automatic upgrades on APT installed packages every night, a process called unattended upgrades. This will sometimes cause issues when the unattended upgrade process tries to update the CUDA toolkit, as it will be able to update the libraries but cannot update the driver, because the driver is in use by some application. The result is that the CUDA driver cannot be used because of the driver-library mismatch, and the Agile Live applications will fail to start.

In case an Agile Live application fails to start with some CUDA error, the easiest way to see if this is because of a driver-library mismatch, is to run nvidia-smi in a terminal and check the output:

$ nvidia-smi

Failed to initialize NVML: Driver/library version mismatch

In case this happens, just reboot the machine and the new CUDA driver should be loaded instead of the old one at startup. (It is sometimes possible to manually reload the CUDA driver without rebooting, but this can be troublesome as you have to find all applications using the driver.)
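The check described above can also be scripted, for example as part of a start-up or monitoring script. The sketch below only looks for the error text shown in the nvidia-smi output above; the function name is hypothetical.

```shell
#!/bin/sh
# Decide from nvidia-smi output whether a driver/library mismatch is present.
is_driver_mismatch() {
    # $1 = combined stdout and stderr of nvidia-smi
    case "$1" in
        *"Driver/library version mismatch"*) return 0 ;;
        *) return 1 ;;
    esac
}

# Only run the check on machines where nvidia-smi is installed
if command -v nvidia-smi >/dev/null 2>&1; then
    output=$(nvidia-smi 2>&1)
    if is_driver_mismatch "$output"; then
        echo "Driver/library mismatch detected: reboot to load the new driver"
    fi
fi
```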

How to disable unattended upgrades of CUDA toolkit

One way to avoid the unpleasant surprise of applications suddenly failing to start, without disabling unattended upgrades for other packages, is to blacklist the CUDA toolkit from the unattended upgrades process. Ubuntu will then still update other packages, but the CUDA toolkit will require a manual upgrade, which can be done at a suitable time.

To blacklist the CUDA driver, open the file /etc/apt/apt.conf.d/50unattended-upgrades in a text editor and look for the Unattended-Upgrade::Package-Blacklist block. Add "cuda" and "(lib)?nvidia" to the list, like so:

// Python regular expressions, matching packages to exclude from upgrading
Unattended-Upgrade::Package-Blacklist {
    "cuda";
    "(lib)?nvidia";
};

This will disable unattended upgrades for packages with names starting with cuda, libnvidia or just nvidia.
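To get a feeling for which packages the two patterns cover, they can be tried against a few package names. The sketch below assumes, as described above, that a pattern is matched against the beginning of the package name. It uses grep with an extended regular expression as a stand-in for the matching done by the unattended upgrades process, and the function name is_blacklisted and the package names are only examples.

```shell
# Hypothetical helper: succeed if the package name given as the first
# argument starts with one of the blacklist patterns from the example.
# Assumption: patterns are matched against the beginning of the name.
is_blacklisted() {
    printf '%s\n' "$1" | grep -Eq '^(cuda|(lib)?nvidia)'
}

# Try the patterns on a few example package names.
for pkg in cuda-toolkit-12-2 libnvidia-compute-535 nvidia-driver-535 libssl3; do
    if is_blacklisted "$pkg"; then
        echo "$pkg: held back from unattended upgrades"
    else
        echo "$pkg: upgraded automatically"
    fi
done
```

With these example names, the first three are reported as held back and libssl3 as upgraded automatically.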

How to manually update CUDA toolkit

When you want to manually update the CUDA toolkit and the driver, run the following commands:

$ sudo apt update

$ sudo apt upgrade

$ sudo apt install cuda

After this, running nvidia-smi will likely report the driver-library mismatch. Reboot the machine to load the new driver.

Sometimes a reboot is required before the cuda package can be installed, so in case the upgrade did not work the first time, try a reboot, then apt install cuda and then reboot again.

3.3.4 - Installing older releases

This page describes a couple of steps in installing the base platform that are specific to releases 5.0.0 and older, and that replace the corresponding sections in the main documentation.

Base packages installed via ‘apt’

To make it possible to run the Ingest and Rendering Engine, some packages must be installed. The following base packages can be installed with the apt package manager:

sudo apt -y update && sudo apt -y upgrade && sudo apt -y install libssl3 libfdk-aac2 libopus0 libcpprest2.10 libspdlog1 libfmt8 libsystemd0 chrony libcairo2 systemd-coredump

The systemd-coredump package in the list above is really handy to have installed to be able to debug core dumps later on.

Installing the Agile Live components

Extract the following release artifacts from the release files listed under Releases:

- Ingest application consisting of binary acl-ingest and shared library libacl-ingest.so

- Production pipeline library libacl-productionpipeline.so and associated header files

- Rendering engine application acl-renderingengine

- Production control panel interface library libacl-controldatasender.so and associated header files

- Generic control panel applications acl-manualcontrolpanel, acl-tcpcontrolpanel and acl-websocketcontrolpanel

3.4 - Configuration and monitoring GUI

The following guide shows how to run the configuration and monitoring GUI using Docker compose. The OS-specific parts of the guide, how to install Docker and aws-cli, are written for Ubuntu 22.04.

Install Docker Engine

First install Docker on the machine on which you want to run the GUI and the GUI database:

sudo apt-get update

sudo apt-get install ca-certificates curl gnupg

sudo install -m 0755 -d /etc/apt/keyrings

curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo gpg --dearmor -o /etc/apt/keyrings/docker.gpg

sudo chmod a+r /etc/apt/keyrings/docker.gpg

echo \
  "deb [arch="$(dpkg --print-architecture)" signed-by=/etc/apt/keyrings/docker.gpg] https://download.docker.com/linux/ubuntu \
  "$(. /etc/os-release && echo "$VERSION_CODENAME")" stable" | \
  sudo tee /etc/apt/sources.list.d/docker.list > /dev/null

sudo apt-get update

sudo apt-get install docker-ce docker-ce-cli containerd.io docker-buildx-plugin docker-compose-plugin

Login to Docker container registry

To access the Agile Docker container registry and pull the GUI image, you need to install the AWS CLI and log in with it.

Install AWS CLI:

sudo apt-get install unzip

curl "https://awscli.amazonaws.com/awscli-exe-linux-x86_64.zip" -o "awscliv2.zip"

unzip awscliv2.zip

sudo ./aws/install

Configure AWS access using the access key ID and secret access key that you should have received from Agile Content.

Set region to eu-north-1 and leave output format as None (just hit enter):

aws configure --profile agile-live-gui

AWS Access Key ID [None]: AKIA****************

AWS Secret Access Key [None]: ****************************************

Default region name [None]: eu-north-1

Default output format [None]:

Log in to Agile’s AWS Elastic Container Registry to be able to pull the container image: 263637530387.dkr.ecr.eu-north-1.amazonaws.com/agile-live-gui. If your user is in the docker group, the sudo part can be skipped.

aws --profile agile-live-gui ecr get-login-password | sudo docker login --username AWS --password-stdin 263637530387.dkr.ecr.eu-north-1.amazonaws.com

MongoDB configuration

The GUI uses MongoDB to store information. When starting up the MongoDB Docker container it will create a database user that the GUI backend will use to communicate with the Mongo database.

Create the file mongo-init.js for the API user (replace <API_USER> and <API_PASSWORD> with a username and password of your choice):

productionsDb = db.getSiblingDB('agile-live-gui');
productionsDb.createUser({
  user: '<API_USER>',
  pwd: '<API_PASSWORD>',
  roles: [{ role: 'readWrite', db: 'agile-live-gui' }]
});

Create a docker-compose.yml file

Create a file docker-compose.yml to start the GUI backend and the MongoDB instance. Give the file the following content:

version: '3.7'
services:
  agileui:
    image: 263637530387.dkr.ecr.eu-north-1.amazonaws.com/agile-live-gui:latest
    container_name: agileui
    environment:
      MONGODB_URI: mongodb://<API_USER>:<API_PASSWORD>@mongodb:27017/agile-live-gui
      AGILE_URL: https://<SYSTEM_CONTROLLER_IP>:<SYSTEM_CONTROLLER_PORT>
      AGILE_CREDENTIALS: <SYSTEM_CONTROLLER_USER>:<SYSTEM_CONTROLLER_PASSWORD>
      NODE_TLS_REJECT_UNAUTHORIZED: 1
      NEXTAUTH_SECRET: <INSERT_GENERATED_SECRET>
      NEXTAUTH_URL: http://<SERVER_IP>:<SERVER_PORT>
      BCRYPT_SALT_ROUNDS: 10
      UI_LANG: en
    ports:
      - <HOST_PORT>:3000
  mongodb:
    image: mongo:latest
    container_name: mongodb
    environment:
      MONGO_INITDB_ROOT_USERNAME: <MONGODB_USERNAME>
      MONGO_INITDB_ROOT_PASSWORD: <MONGODB_PASSWORD>
    ports:
      - 27017:27017
    volumes:
      - ./mongo-init.js:/docker-entrypoint-initdb.d/mongo-init.js:ro
      - ./mongodb:/data/db

Details about some of the parameters:

- image - Use the latest tag to always start the latest version, or set it to :X.Y.Z to lock to a specific version.

- ports - Internally port 3000 is used; open a mapping from a HOST_PORT of your choice to access the GUI.

- extra_hosts - Adding “host.docker.internal:host-gateway” makes it possible for the Docker container to access the host machine via host.docker.internal

- MONGODB_URI - The MongoDB connection string including credentials. API_USER and API_PASSWORD should match what you wrote in mongo-init.js

- AGILE_URL - The URL to the Agile-live system controller REST API

- AGILE_CREDENTIALS - Credentials for the Agile-live system controller REST API, used by the backend to talk to the Agile-live REST API.

- NODE_TLS_REJECT_UNAUTHORIZED - Set to 0 to disable SSL verification. This is useful when testing with self-signed certificates

- NEXTAUTH_SECRET - The secret used to encrypt the JWT token. It can be generated by openssl rand -base64 32

- NEXTAUTH_URL - The base URL for the service, used for redirecting after login, e.g. http://my-host-machine-ip:3000

- BCRYPT_SALT_ROUNDS - The number of salt rounds the bcrypt hashing function will perform; you probably don’t want this higher than 13 (13 = ~1 hash/second)

- UI_LANG - Set the language for the user interface (en|sv). Default is en

- MONGODB_USERNAME - Select a username for a root user in MongoDB

- MONGODB_PASSWORD - Select a password for your root user in MongoDB
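Since the file contains several placeholders in angle brackets, it is easy to leave one of them unreplaced. A small check like the following can catch that before the containers are started. This is only a sketch: the function name find_placeholders is made up for this illustration, and it assumes that placeholders are written as upper-case names in angle brackets, as in the example file above.

```shell
# Hypothetical helper: list the lines in the given file that still contain
# a placeholder of the form <UPPER_CASE_NAME>, prefixed by line number.
# Succeeds (exit status 0) if at least one placeholder is found.
find_placeholders() {
    grep -nE '<[A-Z_]+>' "$1"
}

# Example usage before starting the containers:
#   if find_placeholders docker-compose.yml; then
#       echo "Replace the placeholders listed above first" >&2
#   fi
```

The same check can be run on mongo-init.js, which uses the same placeholder style for API_USER and API_PASSWORD.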

HTTPS support

The Agile-live GUI doesn’t have native support for HTTPS. In order to secure the communication to it you need to add something that can handle HTTPS termination in front of it. An example would be a load balancer on AWS or a container running nginx.

nginx example

This is an example nginx config and docker compose service definition that solves the task of redirecting from HTTP to HTTPS and also terminates the HTTPS connection and forwards the request to the Agile-live GUI. For more examples and documentation please visit the official nginx site.

Create a directory called nginx alongside your docker-compose.yml file and add the nginx server configuration as the file nginx/nginx.conf:

server {
    listen 80;
    listen [::]:80;
    server_name $hostname;
    server_tokens off;

    location / {
        return 301 https://$host$request_uri;
    }
}

server {
    listen 443 default_server ssl http2;
    listen [::]:443 ssl http2;
    server_name $hostname;

    ssl_certificate /etc/nginx/ssl/live/agileui/cert.pem;
    ssl_certificate_key /etc/nginx/ssl/live/agileui/key.pem;

    location / {
        proxy_pass http://agileui:3000;
        proxy_set_header Host $host;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        proxy_set_header X-Forwarded-Proto $scheme;
        proxy_set_header X-Forwarded-Ssl on; # Optional
        proxy_set_header X-Forwarded-Port $server_port;
        proxy_set_header X-Forwarded-Host $host;
        proxy_http_version 1.1;
        proxy_set_header Upgrade $http_upgrade;
    }
}

Store your certificate and key file next to the docker-compose.yml file (cert.pem and key.pem in this example).

Update the docker-compose.yml file to also start the official nginx container, and point it to the nginx.conf, cert.pem and key.pem files via volume mounts:

version: '3.7'
services:
  agileui:
    image: 263637530387.dkr.ecr.eu-north-1.amazonaws.com/agile-live-gui:latest
    container_name: agileui
    environment:
      MONGODB_URI: mongodb://<API_USER>:<API_PASSWORD>@mongodb:27017/agile-live-gui
      AGILE_URL: https://<SYSTEM_CONTROLLER_IP>:<SYSTEM_CONTROLLER_PORT>
      AGILE_CREDENTIALS: <SYSTEM_CONTROLLER_USER>:<SYSTEM_CONTROLLER_PASSWORD>
      NODE_TLS_REJECT_UNAUTHORIZED: 1
      NEXTAUTH_SECRET: <INSERT_GENERATED_SECRET>
      NEXTAUTH_URL: https://<SERVER_IP>
      BCRYPT_SALT_ROUNDS: 10
      UI_LANG: en
    ports:
      - <HOST_PORT>:3000
  webserver:
    image: nginx:latest
    ports:
      - 80:80
      - 443:443
    restart: always
    volumes:
      - ./nginx/:/etc/nginx/conf.d/:ro
      - ./cert.pem:/etc/nginx/ssl/live/agileui/cert.pem:ro
      - ./key.pem:/etc/nginx/ssl/live/agileui/key.pem:ro
  mongodb:
    image: mongo:latest
    container_name: mongodb
    environment:
      MONGO_INITDB_ROOT_USERNAME: <MONGODB_USERNAME>
      MONGO_INITDB_ROOT_PASSWORD: <MONGODB_PASSWORD>
    ports:
      - 27017:27017
    volumes:
      - ./mongo-init.js:/docker-entrypoint-initdb.d/mongo-init.js:ro
      - ./mongodb:/data/db

Note that the NEXTAUTH_URL parameter now should be the https address and not specify the port, as we use the default HTTP and HTTPS ports (without nginx we specified the internal GUI port, 3000).

Starting, stopping and signing in to the Agile-live GUI

Start the UI container by running Docker compose in the same directory as the docker-compose.yml file:

sudo docker compose up -d

Once the containers have started, you should be able to reach the GUI at the host port that you selected in the docker-compose.yml file.

To login to the GUI, use username admin and type a password longer than 8 characters. This password will now be stored in the database and