Overview

Agile Live is a software-based platform for production of media using standard, off-the-shelf hardware and datacenter building blocks.

The Agile Live system consists of multiple software components, distributed geographically between recording studios, remote production control rooms and datacenters.

All Agile Live components share the same understanding of the time ‘now’. The time reference used by all software components is TAI (Temps Atomique International), which provides a monotonic, steady time reference regardless of geographic location.
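To illustrate why TAI is steady, the sketch below converts a UTC instant to the corresponding TAI clock reading. TAI has no leap seconds, so it differs from UTC by a whole number of seconds; that offset has been 37 s since 1 January 2017. The function name `utc_to_tai` and the fixed-offset approach are assumptions made for this example, and how Agile Live components actually obtain TAI is not described here.

```python
from datetime import datetime, timedelta, timezone

# TAI - UTC has been 37 s since 2017-01-01.
# Assumption: this fixed offset is only valid for instants after that date
# and until a further leap second is introduced.
TAI_MINUS_UTC = timedelta(seconds=37)


def utc_to_tai(utc: datetime) -> datetime:
    """Return the TAI clock reading (naive datetime) for a UTC instant."""
    if utc.tzinfo is None:
        raise ValueError("expected a timezone-aware datetime")
    # Normalise to UTC, drop the tzinfo, then apply the fixed offset.
    return utc.astimezone(timezone.utc).replace(tzinfo=None) + TAI_MINUS_UTC


reading = utc_to_tai(datetime(2024, 1, 1, 0, 0, 0, tzinfo=timezone.utc))
print(reading)  # 2024-01-01 00:00:37
```

Because TAI never inserts or repeats a second, the difference between two TAI timestamps is always the true elapsed time, which is the property a shared media clock needs.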

This makes it possible to compensate for differences in relative delay between the media streams. The synchronized timing sets the system apart from other solutions, which are usually bound to buffer levels and various queueing mechanisms.

Synchronizing streams that are transported over networks with different delays makes it possible to build a distributed production environment, where the video and audio sources can be mixed at a different location from the studio where they were captured.
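The idea of compensating for different network delays with a shared clock can be sketched as follows. This is a minimal illustration, not the Agile Live implementation: the `Frame` type, the field names and the fixed `ALIGNMENT_DELAY_US` budget are all hypothetical. Each frame carries its capture time on the shared clock, and the mixer presents every frame at capture time plus the same fixed delay, so frames captured at the same instant line up regardless of how long each one spent in transit.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Frame:
    source: str
    capture_time_us: int  # capture timestamp on the shared clock, microseconds
    arrival_time_us: int  # arrival at the mixer, same clock, microseconds


# Hypothetical fixed budget between capture and presentation in the mixer.
ALIGNMENT_DELAY_US = 200_000


def presentation_time_us(frame: Frame) -> int:
    """Presentation time depends only on capture time, not on network delay."""
    return frame.capture_time_us + ALIGNMENT_DELAY_US


def is_on_time(frame: Frame) -> bool:
    """A frame is usable if it arrived before its presentation time."""
    return frame.arrival_time_us <= presentation_time_us(frame)


# Three sources captured at the same instant, with different transit delays.
frames = [
    Frame("camera-1", capture_time_us=1_000_000, arrival_time_us=1_030_000),
    Frame("camera-2", capture_time_us=1_000_000, arrival_time_us=1_150_000),
    Frame("camera-3", capture_time_us=1_000_000, arrival_time_us=1_250_000),
]

aligned = {f.source: presentation_time_us(f) for f in frames if is_on_time(f)}
late = [f.source for f in frames if not is_on_time(f)]

print(aligned)  # {'camera-1': 1200000, 'camera-2': 1200000}
print(late)     # ['camera-3']
```

In this sketch the first two cameras are presented at the same instant even though their network delays differ by 120 ms, while the third exceeds the delay budget and would have to be handled separately. A larger budget tolerates slower links at the cost of a longer end-to-end delay.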

System overview

Agile Live components

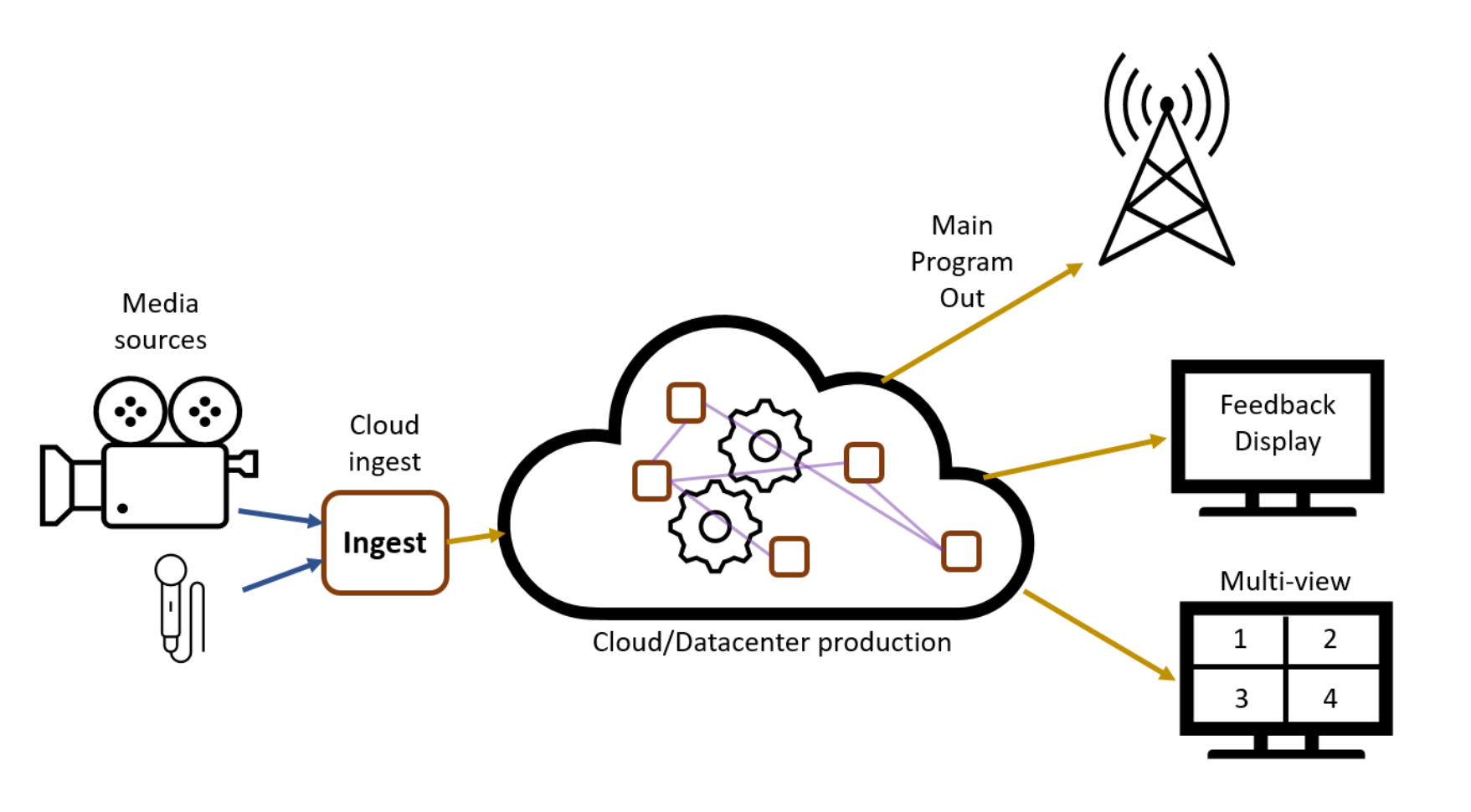

Media sources, such as video cameras and microphones, are connected to a computer called Ingest. In practice, this will usually be some kind of off-the-shelf desktop computer, potentially equipped with a frame grabber card to allow physical input interfaces such as SDI cables, but it could also be a smartphone with a dedicated app using the built-in camera.

The Ingest is responsible for receiving the media data from the source, encoding it and transporting it over a network to the production environment inside the cloud/datacenter.

From the datacenter the remote production team can get multi-view streams of the available media sources to base their production decisions on, as well as a low-delay feedback display showing the result of the production.

The production in the datacenter also outputs a high-quality program output, which is broadcast to the viewers. Both the multi-views and the feedback display will usually have a lower quality than this program output. This keeps the end-to-end delay between what happens in front of the cameras in the studio and the output stream on the feedback display as short as possible.

This in turn allows for feedback loops back to the studio, for instance remotely controlling video cameras based on what the production team sees on the multi-views, or placing additional feedback displays in the studio.