Introduction to Agile Live technology

Agile Live is a software-based platform for live media production that enables remote and distributed workflows on standard, off-the-shelf hardware. It can be deployed on-premises or in a private or public cloud.

The system is built with an ‘API first’ philosophy throughout. It consists of several stacked conceptual layers, and a customer may choose how many layers to include in their solution, depending on how much they wish to customise themselves. It is implemented in a distributed manner, as a number of software binaries that the customer is responsible for running on their own hardware and operating system. When all layers are used, the full solution provides media capture, synchronised contribution, video and audio mixing (including Digital Video Effects and HTML-based graphics overlay), various production control panel integrations, a fully customisable multiview, primary distribution, and a management and orchestration API layer with a configuration and monitoring GUI on top of it.

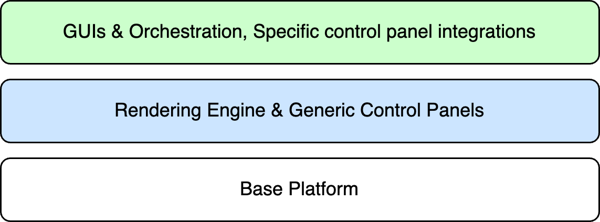

The layers of the platform are the “Base Layer”, the “Rendering Engine & Generic Control Panels” layer and the “GUIs & Orchestration, Specific Control Panels” layer.

Agile Live Layers

The base layer

The base layer of the platform can, in a very simplified way, be described as being responsible for transporting media of diverse types from many different locations into a central location in perfect sync, presenting it there to higher layer software that processes it in some way, and distributing the end result. This higher layer software, which is not a part of the base layer, is referred to as a “rendering engine”. The base layer also carries control commands from remote control panels into the rendering engine, without having any knowledge of the actual control command syntax.

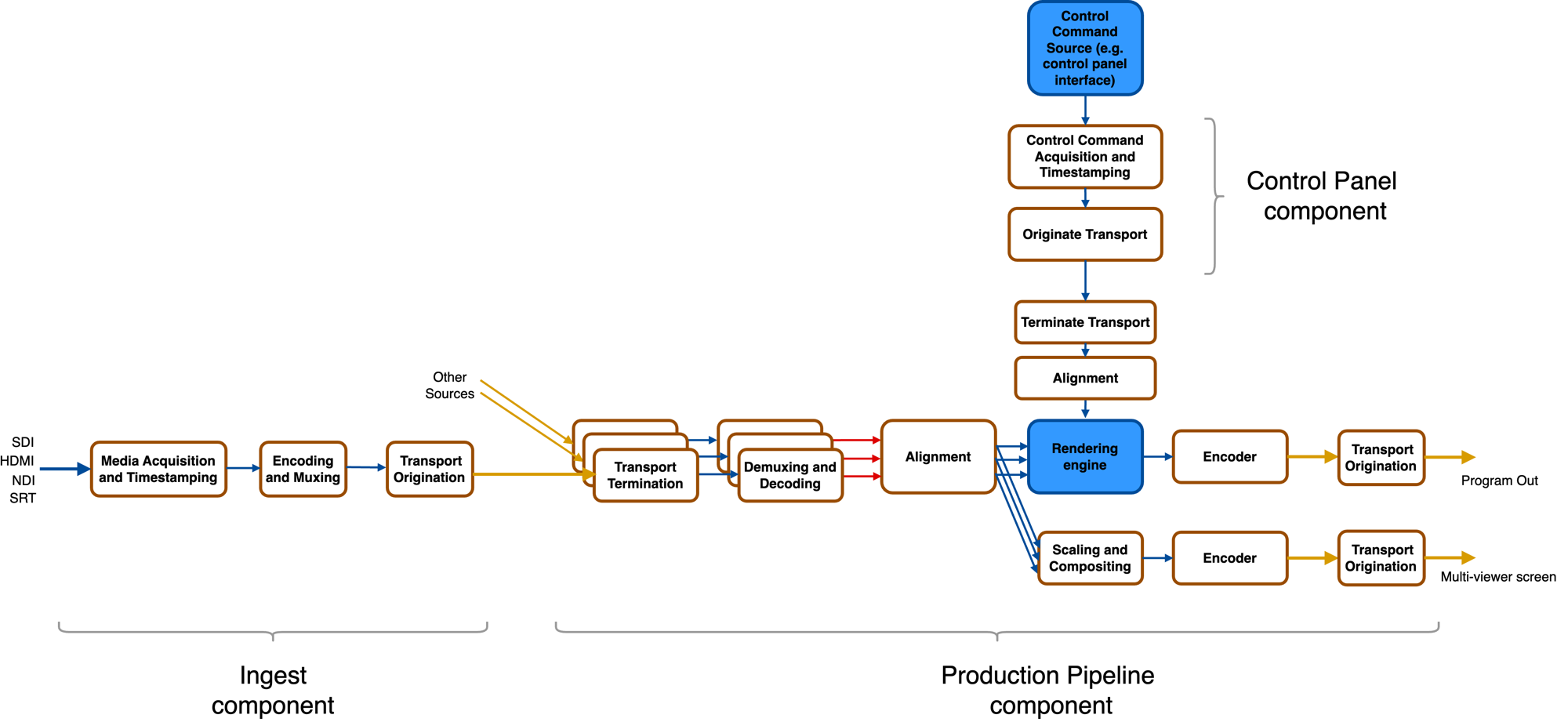

The image shows a schematic of the roles played by the base layer. The parts in blue are higher layer functionality.

Base Layer

The base layer is separated into three components based on their different roles in a distributed computing system:

- the Ingest component handles acquisition of the media sources and timestamping of media frames, followed by encoding, muxing and origination of reliable transport over unreliable networks using SRT or RIST

- the Production Pipeline component handles terminating all incoming media streams from ingests, demuxing and decoding, aligning all the sources according to timestamps and feeding them synchronously into the rendering engine. It also receives the resulting media frames from the rendering engine and encodes, muxes and streams out the media streams using MPEG-TS over UDP or SRT. It constructs a multiview from the aligned sources by fully customizable scaling and compositing. Finally, it receives control commands from the control panel component, aligns them in time with the media sources and feeds them into the rendering engine. NOTE that the rendering engine is NOT a part of the base layer, and must be supplied by a higher layer or by the implementer themselves.

- the control panel component is responsible for acquiring control command data (of whose internal syntax it has no knowledge), timestamping it, and sending it to the production pipeline component.
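The timestamp-based alignment performed by the production pipeline component can be illustrated with a small conceptual sketch. This is not the Agile Live library API: the function name `align_by_timestamp`, the tuple layout and the source names are all assumptions made for illustration. The sketch buffers incoming frames per timestamp and releases a set of frames only once every expected source has delivered a frame for that timestamp.

```python
from collections import defaultdict


def align_by_timestamp(frames, sources):
    """Yield (timestamp, {source: payload}) once all sources have a frame.

    `frames` is an iterable of (source, timestamp, payload) tuples in
    arrival order. Hypothetical data layout, for illustration only.
    """
    expected = set(sources)
    pending = defaultdict(dict)  # timestamp -> {source: payload}
    for source, timestamp, payload in frames:
        if source not in expected:
            continue  # ignore frames from sources we are not aligning
        pending[timestamp][source] = payload
        if len(pending[timestamp]) == len(expected):
            # Every source has delivered this timestamp: release the set.
            yield timestamp, pending.pop(timestamp)


# Frames arrive interleaved and out of order between the two sources.
arrivals = [
    ("cam1", 100, "a0"),
    ("cam2", 100, "b0"),
    ("cam2", 140, "b1"),
    ("cam1", 140, "a1"),
]
aligned = list(align_by_timestamp(arrivals, ["cam1", "cam2"]))
```

A real implementation would also need a policy for late or missing frames (for example a maximum wait time), which this sketch leaves out.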

The base layer is delivered as libraries and header files, which are used to build the binaries that make up the platform. For convenience, the ingest component is also delivered as a precompiled binary named acl-ingest, as there is currently very little customization to be done around it.

The functionality of the base layer is managed via a REST API. A separate program called the System Controller acts as a REST service and communicates with the rest of the base layer software to control and monitor it. The REST API is OpenAPI 2.0 compliant, and is documented here. Apart from the three components (ingest, production pipeline, controlpanel), two concepts are central to the REST API: a Stream (transporting one source from one ingest to one production pipeline using one specific set of properties) and a controlconnection (transporting control commands from one control panel to one production pipeline, or from one production pipeline to another).
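The relationships between these concepts can be sketched as a small data model. This is only an illustration of the description above, not the schema of the REST API: all class names, field names and identifiers below are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Stream:
    """One source, from one ingest, to one production pipeline."""
    ingest: str
    source: str
    pipeline: str
    properties: dict = field(default_factory=dict)  # one specific set of properties


@dataclass
class ControlConnection:
    """Carries control commands towards one production pipeline."""
    sender_kind: str  # "controlpanel" or "pipeline" (hypothetical labels)
    sender: str
    receiver_pipeline: str

    def __post_init__(self):
        # Per the text, only control panels and pipelines originate commands.
        if self.sender_kind not in ("controlpanel", "pipeline"):
            raise ValueError(f"unsupported sender kind: {self.sender_kind}")


# Hypothetical identifiers, for illustration only.
stream = Stream(ingest="ingest-1", source="camera-1", pipeline="pipeline-1")
panel_link = ControlConnection("controlpanel", "panel-1", "pipeline-1")
chain_link = ControlConnection("pipeline", "pipeline-1", "pipeline-2")
```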

The rendering engine and generic control panels layer

Though it is fully possible to use only the base layer and to build everything else yourself, a very capable rendering engine is supplied. Our rendering engine implementation consists of a modular video mixer, an audio router and mixer, an interface towards third-party audio mixers, and an HTML renderer to overlay HTML-based graphics. It defines a control protocol for configuration and control which can be used by specific control panel implementations.

The rendering engine is delivered bundled with the production pipeline component as a binary named acl-renderingengine. This is because the production pipeline component from the base layer is only delivered as a library that the rendering engine implementation makes use of.

Communication with the rendering engine for configuration and control takes place over the control connections from control panels, as provided by the base layer. The control panels are constructed using the control panel library, but to assist in getting up and running fast, a few generic control panels are provided that act as proxies for control commands. One sets up a TCP server and accepts control commands over connections to it, and another acts as a websocket server for the same purpose. Finally, there is one that takes control commands over stdin from a terminal session and forwards them.
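As a rough illustration of how a client might hand a command to the TCP-based generic control panel, the sketch below opens a TCP connection and writes one command string. The host, port, newline framing and the command text itself are assumptions; the actual command syntax is defined by the rendering engine's control protocol and is not shown here.

```python
import socket


def send_control_command(host, port, command, timeout=2.0):
    """Send one command string over a fresh TCP connection.

    Newline termination is an assumption of this sketch, not a
    documented requirement of the control panel.
    """
    with socket.create_connection((host, port), timeout=timeout) as conn:
        conn.sendall(command.encode("utf-8") + b"\n")


# Example call (hypothetical address and command, not run here):
# send_control_command("192.0.2.10", 9000, "<command in the rendering engine's syntax>")
```

Opening a new connection per command keeps the sketch short; a real control surface would more likely keep one connection open and reuse it.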

The GUI and orchestration layer

On top of the REST API it is possible to build GUIs for configuring and monitoring the system. One default GUI is provided.

Prometheus exporters can be configured to report on the details of the machines in the system, and there is also an exporter for the REST API statistics. The data in Prometheus can then be presented in Grafana.

Using the control command protocol defined by our rendering engine and either the generic control panels or custom control panel integrations, GUIs can be constructed to configure and control the components inside the rendering engine. For example, Bitfocus Companion can be configured to send control commands to the tcpcontrolpanel which will send them further into the system. The custom integration audio_control_gui can be used to interface with audio panels that support the Mackie Control protocol and to send control commands to the tcpcontrolpanel.

Direct editing mode vs proxy editing mode

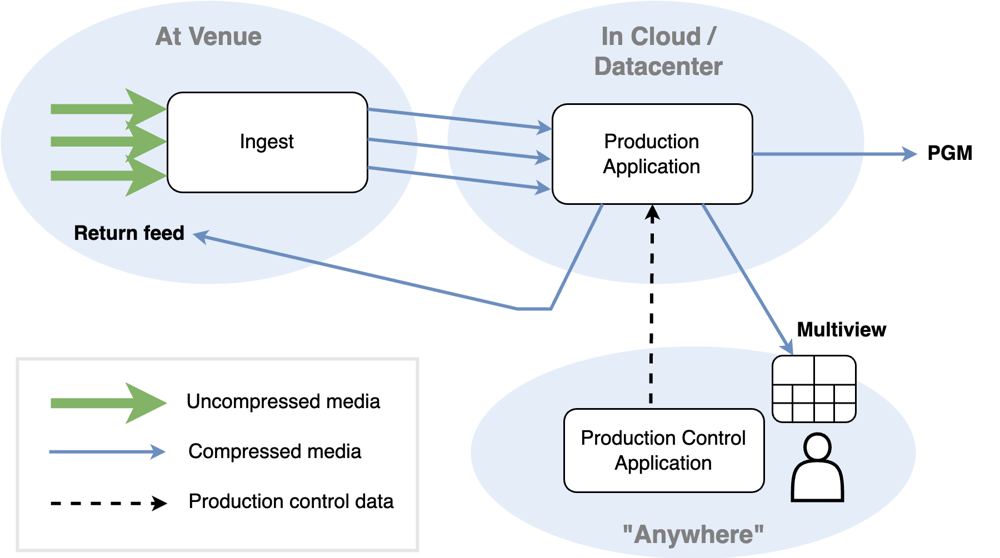

The components of the Agile Live platform can be combined in many different ways. The two most common ways are the Direct Editing Mode and the Proxy Editing Mode. The direct editing mode is what has commonly been done for remote productions, where the mixer operator edits directly in the flow that goes out to the viewer.

Direct editing mode

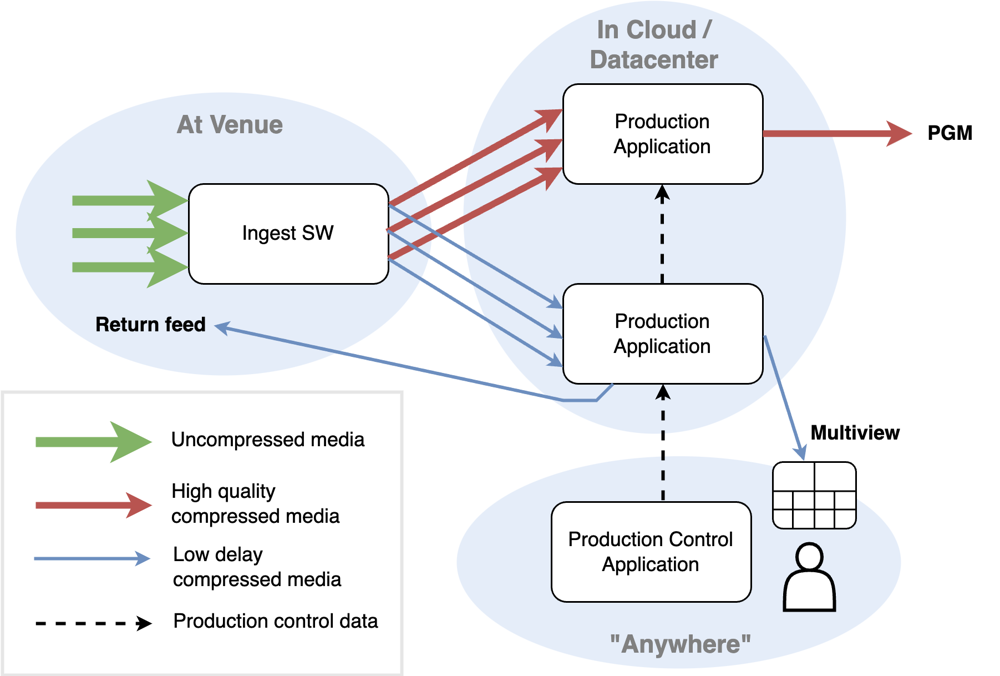

In the proxy editing mode, the operator works with a low delay but lower quality flow, makes mixing decisions, and then those decisions are replicated in a frame accurate manner on a high quality flow that is delayed in time.

Proxy editing mode

There are good reasons for using a proxy editing mode. The mixer operator may be communicating via intercom with personnel at the venue, such as camera operators, or PTZ cameras may be operated remotely. In these cases it is important that the delay from the cameras to the production pipeline and out to the mixer operator is as short as possible. But low latency for this flow is in direct opposition to high quality video compression and very reliable transport. Therefore, in the proxy editing mode, we have both a low delay workflow and a high quality but slower workflow. This way both requirements can be satisfied. This technique is made possible by the synchronization functionality of the base layer.
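The idea of replicating mixing decisions on the delayed flow can be sketched as follows. This is a conceptual illustration only, not how Agile Live implements it: the function name `replay_decisions`, the integer timestamps and the source names are assumptions. Each decision carries the timestamp of the proxy frame it was made on, and the same decision is applied when the high quality flow reaches the frame with that timestamp.

```python
def replay_decisions(decisions, frame_timestamps, initial_source):
    """Return (timestamp, selected_source) for each high quality frame.

    `decisions` is a list of (timestamp, source) pairs recorded on the
    low delay flow; `frame_timestamps` must be in ascending order.
    """
    ordered = sorted(decisions)
    selected = initial_source
    output = []
    i = 0
    for ts in frame_timestamps:
        # Apply every decision whose timestamp has been reached.
        while i < len(ordered) and ordered[i][0] <= ts:
            selected = ordered[i][1]
            i += 1
        output.append((ts, selected))
    return output


# The operator cut to cam2 on proxy frame 40 and back to cam1 on frame 120.
program = replay_decisions(
    [(40, "cam2"), (120, "cam1")],
    [0, 40, 80, 120, 160],
    initial_source="cam1",
)
```

Because the decisions are keyed on media timestamps and not on wall-clock time, the extra delay of the high quality flow does not affect which frame each cut lands on.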