Statistics in Agile Live

This tutorial explains in more detail how the statistics in Agile Live are measured by the system and how they should be interpreted.

Statistics

Timestamps and measurements

Let’s start by taking a closer look at how the system measures the following statistics, which are reported per stream for Ingests and Pipelines:

/ingests/{uuid}/streams/{stream_uuid} {

processing_time_audio,

processing_time_video,

audio_encode_duration,

video_encode_duration

}

/pipelines/{uuid}/streams/{stream_uuid} {

time_to_arrival_audio,

time_to_arrival_video,

time_to_ready_audio,

time_to_ready_video,

audio_decode_duration,

video_decode_duration

}

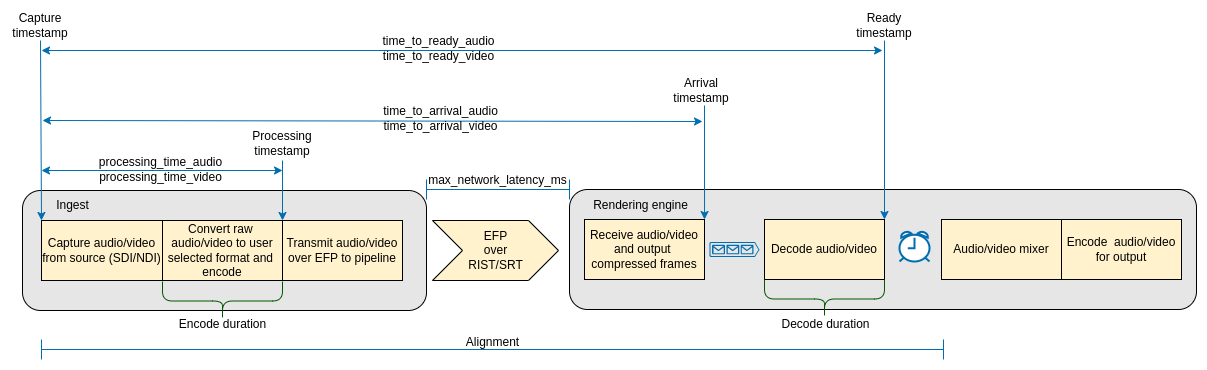

All ingested media is assigned a timestamp as soon as the audio and video are captured; this is the capture timestamp. Using this timestamp as a reference, the system calculates three further values, each measuring the time that has passed since the capture timestamp was taken.
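To make the relationship concrete, here is a minimal sketch that models one frame's journey as a set of event times and derives the three values from them. The FrameTimeline class and its field names are hypothetical, not part of Agile Live. The sketch also assumes that all times are in milliseconds on one shared clock; the units the statistics are reported in are an assumption here.

```python
from dataclasses import dataclass


@dataclass
class FrameTimeline:
    """Hypothetical per-frame event times, assumed to be in ms on one shared clock."""
    capture: float  # capture timestamp assigned at ingest
    encoded: float  # encoder has returned the compressed frame (Ingest)
    arrived: float  # full frame received over EFP (Pipeline side)
    decoded: float  # decoder has returned the raw frame (Pipeline side)


def stream_statistics(t: FrameTimeline) -> dict[str, float]:
    """Each statistic is the time elapsed since the capture timestamp."""
    return {
        "processing_time": t.encoded - t.capture,
        "time_to_arrival": t.arrived - t.capture,
        "time_to_ready": t.decoded - t.capture,
    }


stats = stream_statistics(
    FrameTimeline(capture=1000.0, encoded=1012.5, arrived=1065.0, decoded=1078.0)
)
print(stats)  # {'processing_time': 12.5, 'time_to_arrival': 65.0, 'time_to_ready': 78.0}
```

Because every value shares the capture timestamp as its reference, the three values should normally grow in the order listed.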

processing_time_audio & processing_time_video

After the capture step, the audio and video are converted into the format the user selected, which may involve resizing, deinterlacing and any color format conversion that is needed. The audio and video are then encoded. Once encoding is done, a new timestamp is taken, and the processing time is the difference between this timestamp and the capture timestamp. Note that these values may be negative (and, in rare cases, so may the values described in the following sections) when the system has shifted the capture timestamp into the future to handle drift.
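The effect of a shifted capture timestamp can be shown with a small sketch. The drift_shift_ms parameter is a hypothetical stand-in for however far the system has moved the capture timestamp into the future; it is not an Agile Live setting, and times are assumed to be in milliseconds.

```python
def processing_time(capture_ms: float, encode_done_ms: float, drift_shift_ms: float = 0.0) -> float:
    """Time from the (possibly shifted) capture timestamp until encoding is done.

    drift_shift_ms is hypothetical: the amount the capture timestamp has been
    moved into the future to handle drift. Units are assumed to be milliseconds.
    """
    shifted_capture_ms = capture_ms + drift_shift_ms
    return encode_done_ms - shifted_capture_ms


# Normal case: encoding finishes 8 ms after capture.
print(processing_time(capture_ms=0.0, encode_done_ms=8.0))  # 8.0
# Capture timestamp shifted 10 ms into the future: the value becomes negative.
print(processing_time(capture_ms=0.0, encode_done_ms=8.0, drift_shift_ms=10.0))  # -2.0
```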

time_to_arrival_audio & time_to_arrival_video

When the Rendering Engine receives the EFP stream and has received a full audio or video frame, a new timestamp is taken; the time to arrival is the difference between this timestamp and the capture timestamp. This duration also includes the network transport time, i.e. the maximum time that the network protocol will use for retransmissions. This maximum can be configured with the max_network_latency_ms value when setting up the stream.
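Because processing time and time to arrival share the same reference point, subtracting one from the other gives a rough estimate of how long a frame spent between leaving the encoder and being fully received. The following sketch assumes both values describe the same frame (or are comparable averages) and are in milliseconds; the function names are hypothetical helpers, not part of Agile Live.

```python
def transport_estimate_ms(processing_time_ms: float, time_to_arrival_ms: float) -> float:
    """Approximate time from encoder output until a full frame is received.

    Both statistics are measured from the same capture timestamp, so the
    capture and encoding steps cancel out in the difference.
    """
    return time_to_arrival_ms - processing_time_ms


def share_of_configured_latency(transport_ms: float, max_network_latency_ms: float) -> float:
    """Ratio of the estimated transport time to the configured max_network_latency_ms."""
    if max_network_latency_ms <= 0:
        raise ValueError("max_network_latency_ms must be positive")
    return transport_ms / max_network_latency_ms


transport = transport_estimate_ms(processing_time_ms=10.0, time_to_arrival_ms=60.0)
print(transport)                                      # 50.0
print(share_of_configured_latency(transport, 200.0))  # 0.25
```

The estimate also covers any packetization and buffering on either side of the network, so treat it as an upper bound on the pure network time rather than a measurement of it.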

time_to_ready_audio & time_to_ready_video

The next step is to decode the compressed audio and video into raw data. When a frame has been decoded, a new timestamp is taken; the time to ready is the difference between this timestamp and the capture timestamp. This is the point at which the frame is ready to be delivered to the Rendering Engine.

The alignment of the stream between Ingest and Pipeline must be larger than the time_to_ready_audio and time_to_ready_video values; otherwise, frames arrive too late and are dropped. If you experience dropped frames, these are good values to check, and increasing the alignment value may help. You can also experiment with lowering the bitrate or decreasing the max_network_latency_ms setting.

If a frame is ready earlier than the alignment time, it is queued until the system reaches the alignment time. For video frames there is also a queue of compressed frames before the actual decoder, which ensures that at most 10 decoded frames are kept in memory after the decoder. As a result, the difference between time_to_ready_video and the alignment value is normally never larger than the duration of 10 video frames.
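The alignment rules above can be turned into a quick check. This sketch compares a time_to_ready value with the alignment value and expresses the queued time in video frame durations. The AlignmentReport type, its fields and the check_alignment function are hypothetical. The 10-frame limit comes from the video queue described above, and all times are assumed to be in milliseconds.

```python
from dataclasses import dataclass

# At most 10 decoded video frames are kept after the decoder.
MAX_DECODED_VIDEO_FRAMES = 10


@dataclass
class AlignmentReport:
    too_late: bool            # ready at or after the alignment time: frame is dropped
    queued_ms: float          # how long a ready frame waits for the alignment time
    margin_frames: float      # (alignment - time_to_ready) in video frame durations
    beyond_video_queue: bool  # margin larger than the video queue normally allows


def check_alignment(alignment_ms: float, time_to_ready_ms: float, fps: float) -> AlignmentReport:
    frame_ms = 1000.0 / fps
    margin_ms = alignment_ms - time_to_ready_ms
    margin_frames = margin_ms / frame_ms
    return AlignmentReport(
        too_late=margin_ms <= 0,
        queued_ms=max(margin_ms, 0.0),
        margin_frames=margin_frames,
        beyond_video_queue=margin_frames > MAX_DECODED_VIDEO_FRAMES,
    )


# 50 fps video (20 ms per frame), alignment 200 ms, frames ready after 150 ms.
ok = check_alignment(alignment_ms=200.0, time_to_ready_ms=150.0, fps=50.0)
print(ok.too_late, ok.queued_ms, ok.margin_frames)  # False 50.0 2.5

# Alignment set below time_to_ready: frames would be dropped.
late = check_alignment(alignment_ms=100.0, time_to_ready_ms=150.0, fps=50.0)
print(late.too_late)  # True
```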

The system also tracks how long each frame is processed by the encoders and decoders, using the following values:

audio_encode_duration & video_encode_duration

These metrics show how long audio and video frames take to pass through their respective encoders. The duration includes any delay in the encoder, measured from when each frame is passed to the encoder until the encoder returns the compressed frame. For video, it also includes the time spent on any format conversion, resizing and deinterlacing. Note that B-frames will cause the metric to exceed one frame time, as in some cases the encoder needs further frames before it can output the previous ones to the stream.
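Expressing an encode duration in frame times makes the B-frame effect easier to spot. In this sketch the values are assumed to be in milliseconds and the helper names are hypothetical. The audio example assumes 1024 samples per frame at 48 kHz (typical for AAC); the audio codec and frame size are assumptions, not something this tutorial specifies.

```python
def video_frame_ms(fps: float) -> float:
    """Duration of one video frame in milliseconds."""
    return 1000.0 / fps


def audio_frame_ms(samples_per_frame: int, sample_rate_hz: int) -> float:
    """Duration of one audio frame in milliseconds."""
    return 1000.0 * samples_per_frame / sample_rate_hz


def in_frame_times(duration_ms: float, frame_ms: float) -> float:
    """How many frame durations an encode (or decode) duration corresponds to."""
    return duration_ms / frame_ms


# A 60 ms video_encode_duration at 50 fps spans 3 frame times; a value above 1
# is what the text above attributes to B-frames.
print(in_frame_times(60.0, video_frame_ms(50.0)))  # 3.0

# Audio: 1024 samples at 48 kHz is about 21.33 ms per frame (assumed values).
print(round(in_frame_times(10.0, audio_frame_ms(1024, 48_000)), 2))  # 0.47
```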

audio_decode_duration & video_decode_duration

These metrics show how long audio and video frames take to pass through their respective decoders. The duration includes any delay in the decoder, measured from when each frame is passed to the decoder until the decoder returns the raw frame. Here too, B-frames will cause this time to exceed one frame time, because the decoder has to wait for more frames before decoding can start.
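On the Pipeline side, the statistics can be combined to see where time goes between arrival and readiness. This sketch assumes all three values describe the same video frame and are in milliseconds; the function name and result keys are hypothetical. The remainder after subtracting the decode duration is attributed to waiting, mainly in the queue of compressed video frames before the decoder, though it may also include other small overheads.

```python
def pipeline_breakdown(time_to_arrival_ms: float, time_to_ready_ms: float,
                       decode_duration_ms: float) -> dict[str, float]:
    """Split the time between full-frame arrival and decoded-frame readiness."""
    arrival_to_ready = time_to_ready_ms - time_to_arrival_ms
    return {
        "arrival_to_ready": arrival_to_ready,
        "decode": decode_duration_ms,
        # Time not spent in the decoder, e.g. waiting in the compressed-frame queue.
        "waiting": arrival_to_ready - decode_duration_ms,
    }


print(pipeline_breakdown(time_to_arrival_ms=60.0, time_to_ready_ms=150.0, decode_duration_ms=40.0))
# {'arrival_to_ready': 90.0, 'decode': 40.0, 'waiting': 50.0}
```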